In our everyday lives, humans make assumptions about an individual’s personality from facial identity and facial expressions – often as quickly as in the first 100 milliseconds. For example, studies consistently show that people who smile are considered more trustworthy. More problematic assumptions are often drawn from appearance, including gender, race or disability.

These ‘social judgements’ can be inaccurate, yet we often retain them as our social interaction with the other person unfolds.

A study (by Hausladen, Camerer, Knott and Perona) shows that AI also makes fine-grained, human-like social judgements on face images. It is important to note that the AI in this study was not specifically trained on facial expressions. Instead, it drew its associations between particular faces and personality traits from the vast data set on which it was trained.

Note: Descriptors of demographic groups reflect the categories used in the study’s synthetic face dataset. The study’s language is direct, reflecting the confronting nature of the biases and stereotypes uncovered in AI systems.

Study design

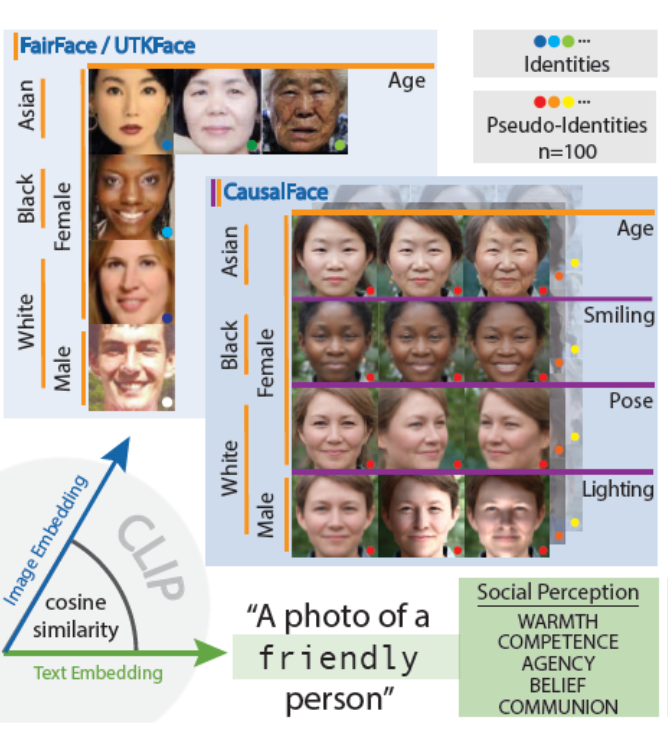

As a proxy for the ‘social judgement’ the AI was making about a series of faces, the study measured the strength of association between a face image and a text description of a personality trait, a measure called cosine similarity. For example, the text description “CEO” is more strongly associated (cosine-similar) with images depicting men than those depicting women.
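To make the measure concrete, here is a minimal sketch of how cosine similarity is computed. It assumes the AI model turns both the face image and the text description into numeric vectors (embeddings) in a shared space. The three-dimensional vectors and the labels below are invented for illustration and are not taken from the study.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (1 = same direction)."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)

# Hypothetical embeddings (assumption): a real model would produce
# vectors with hundreds of dimensions, not three.
face_embedding = [0.2, 0.9, 0.1]
text_embeddings = {
    "trustworthy": [0.1, 0.8, 0.2],
    "untrustworthy": [0.9, 0.1, 0.3],
}

# One score per text description: higher means a stronger association.
scores = {
    label: cosine_similarity(face_embedding, vector)
    for label, vector in text_embeddings.items()
}
```

With these made-up vectors, the face scores higher against “trustworthy” than against “untrustworthy”. That difference in scores is the kind of signal the study treats as a proxy for a social judgement.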

This study has two unique design features:

The text descriptors of human personality traits used to query the AI model were based on evidence from social psychology (of humans). According to the widely accepted stereotype content model (SCM), the most relevant criteria are ‘Communion’ (akin to Warmth), ‘Agency’ (socio-economic success akin to Competence) and a third dimension of political ideology ‘Beliefs’ (progressive–conservative).

Using the capacity of AI to produce compelling human images, the faces were not of real people but realistic synthetic faces. Real faces collected in natural settings introduce a lot of ‘noise’ that makes it difficult to control for unmeasured variations. As the researchers explain:

if younger people tend to smile more in photographs and a smiling face is judged as emotionally warmer, that correlation of smiling with age will confound and inflate the degree to which younger people are judged as warmer.

The researchers could produce a string of images of the same face with the same background lighting, in which the only variation was from a neutral non-smiling face, through an ambiguous Mona Lisa-type facial expression to a broad smile.

The set of artificially generated ‘seed’ images comprised depictions across racial and gender categories – Asian, Black and White faces, with male and female versions of each. Each seed image was varied in steps along age, smiling, lighting and pose. The study ended up with 18,000 images as variations on the original seed images. To ensure the synthetic faces would not distort the study, the researchers compared them with two observational datasets of real faces, FairFace and UTKFace, and found no statistical differences (incidentally demonstrating how effective AI has become at generating human-like images).
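The confounding problem described above, and why a controlled set of images avoids it, can be illustrated with a toy calculation. Everything here is an assumption made for illustration: the warmth rule, the smile levels and the sample data are invented and do not come from the study.

```python
from itertools import product

def judged_warmth(smile):
    # Toy rule (assumption): warmth depends only on smiling, never on age.
    return 0.5 + 0.4 * smile

def mean(values):
    values = list(values)
    return sum(values) / len(values)

def age_gap(samples):
    """Mean warmth of 'young' faces minus mean warmth of 'old' faces."""
    young = mean(judged_warmth(s) for age, s in samples if age == "young")
    old = mean(judged_warmth(s) for age, s in samples if age == "old")
    return young - old

# Observational sample (hypothetical): younger people smile more in photos.
observational = [
    ("young", 1.0), ("young", 1.0), ("young", 0.5),
    ("old", 0.5), ("old", 0.0), ("old", 0.0),
]

# Controlled sample: every age group is rendered at every smile level,
# so smiling can no longer vary with age.
smile_levels = [0.0, 0.5, 1.0]
controlled = list(product(["young", "old"], smile_levels))

observational_gap = age_gap(observational)  # apparent age effect
controlled_gap = age_gap(controlled)        # age effect with smiling held equal
```

In the observational sample, young faces appear warmer even though age plays no part in the toy rule. In the controlled sample the gap disappears, which is the reason for varying one attribute at a time across otherwise identical synthetic faces.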

Results

The study found that changing angles of light incidence (lighting) and head orientations (pose) had a similar impact on cosine measurements regardless of face type or facial expression. For example, similarly high cosine similarities were observed for central, forward-looking poses and low cosine measures for more oblique or turned poses. This suggests that in some situations (such as in poor light) AI had trouble ‘reading’ anything from a face, but that once it could ‘see’ a face, external factors such as light and pose had no material impact on the social judgement the AI was making.

As the same face transitions from a frowning expression to a smile, it is perceived by AI as more positive, more Progressive (Belief) and less negative. Yet at a more granular level, AI’s social judgements varied notably across demographic groups.

Faces depicting Asian men display the highest values in three social dimensions, namely Positive Agency, Conservative Belief and Negative Communion.

Among the groups studied, faces depicting Black women with neutral expressions showed the lowest competence cosine similarity of any intersectional group. Increasing the degree of smiling in these faces resulted in the steepest positive increase in AI perceptions of Warmth, Positive Communion, Competence and Belief – with broadly smiling depictions rated highest for competence.

The researchers also observed contrasting U-shaped and inverted U-shaped patterns in perceived warmth between faces depicting Black women and faces depicting White women. The AI rated depictions of Black women as warmest at young ages, least warm in mid-age and then increasingly warm in older age. Conversely, depictions of White women were rated least warm at younger ages, warmest in mid-age and less warm again in older age.

The researchers suggest that this may reflect entrenched cultural stereotypes, particularly relating to age and gender.
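The two contrasting age patterns can be told apart with a simple check on scores at three ordered age points. The sketch below is only an illustration: the function name and the numbers are hypothetical and are not the study's measurements.

```python
def curve_shape(young, mid, old):
    """Classify three age-ordered scores by where the middle value sits."""
    if mid < young and mid < old:
        return "U-shaped"            # dips in mid-age, higher at both ends
    if mid > young and mid > old:
        return "inverted U-shaped"   # peaks in mid-age, lower at both ends
    return "monotonic or flat"

# Hypothetical warmth scores, chosen only to mirror the patterns described.
pattern_a = curve_shape(young=0.30, mid=0.20, old=0.28)
pattern_b = curve_shape(young=0.20, mid=0.30, old=0.22)
```

Here `pattern_a` follows the shape described for depictions of Black women and `pattern_b` the shape described for depictions of White women.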

There are also differences in AI’s social judgement by gender:

Perceived competence of faces depicting White and Black women declines with age, most steeply for the latter, while that of men remains roughly constant.

While more smiling overall gains positive responses from the AI, only men (regardless of race) see a decrease in Negative Communion (that is, AI associates men less strongly with negative warmth-related traits when they smile). Among Asian and Black women, more smiling paradoxically corresponds to an increase in Negative Communion perceptions. For White women, increased smiling first decreases and then increases Negative Communion perception.

Age by itself (when not combined with race and gender in an intersectional group) does not seem to have much impact on AI’s social judgements – no more than a change in the background lighting.

How similar are AI’s social judgements to those made by humans?

The researchers compared their study results with studies of human reactions to faces and facial expressions:

AI, like humans, responds positively overall to more smiling.

For humans, gender was found to affect honesty judgements for non-smiling individuals but not for smiling individuals. However, for AI, honesty perceptions are the same for men and women whether or not they are smiling.

For humans, there is a decline in perceived Warmth for White women transitioning from young to middle-aged adulthood. While AI has a similar decrease in Warmth for Black women, AI’s warmth perception increases for White women into middle age.

For humans, there is no change in the Warmth perception for aging White men, but AI rates depictions of older men across all racial groups more warmly.

Both human decision-making and AI systems can reflect social biases, which often result in disproportionately negative outcomes for women from racially and ethnically diverse backgrounds.

Conclusion

As AI is trained on human-created content, it is unsurprising that it reflects existing human stereotypes. This can have some serious implications. With their capability to replicate human behaviour, LLMs are increasingly used to replace humans in behavioural studies. Developers race to make their AI ever more human-like. Autonomous AI will ‘scan’ the human it is dealing with as an input to its independent decision making. In all of this, will we be automating our own stereotypes and creating new ones at scale?

Peter Waters

Consultant