AI is ‘here and now’ in our health system:

In 2015, the US Food and Drug Administration approved only six AI medical devices, but yearly approvals surged to 223 in 2023.

In 2024, two Nobels were awarded for AI-related medical breakthroughs. Google DeepMind’s Demis Hassabis and John Jumper won the Nobel Prize in Chemistry for their pioneering work on protein folding with AlphaFold, while John Hopfield and Geoffrey Hinton received the Nobel Prize in Physics for their foundational contributions to neural networks.

AI has the potential to transform healthcare through improving outcomes for both patients and clinicians, lowering costs, benefiting population health and advancing research and innovation. For consumers, AI can assist in navigating the healthcare system, providing real-time language translation and making services more accessible. For healthcare providers, it can support quality care by reducing the impact of human factors such as fatigue, burn-out and cognitive biases.

However, alongside these opportunities are concerns about the safety and risks of AI in the sector, and doubts that the existing legislative and regulatory frameworks adequately mitigate the risk of harm. Key challenges include data governance, privacy and ethics, all of which are amplified by the direct impact AI can have on patient safety in healthcare settings.

What and where is AI in healthcare?

AI in health encompasses a broad range of technologies used to support healthcare delivery, management or research. These include tools developed specifically for therapeutic use and general tools that are applied in the healthcare setting. Stanford’s 2024 AI Index identifies some of the recent advances in medical AI:

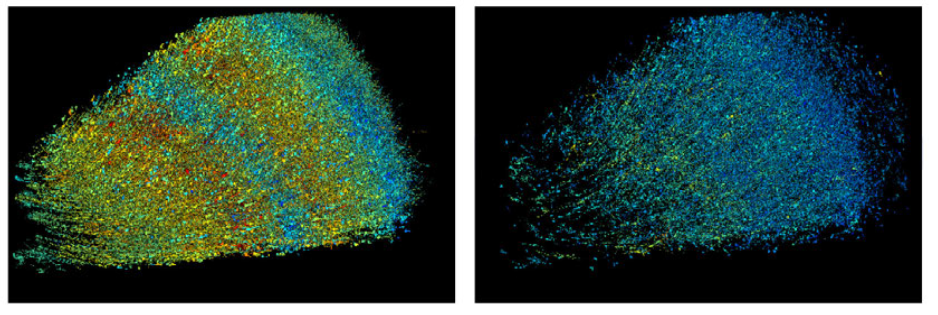

Synaptically reconstructing a small piece of the human brain: Google’s Connectomics team has reconstructed a one-cubic millimetre section of the human brain at the synaptic level – hailed by Wired as “the most detailed map of brain connections ever made”. The dataset is publicly available via Neuroglancer, a web-based exploration tool.

Virtual Lab teams: A recent Stanford study assembled a virtual AI laboratory, where multiple AI agents specialising in different disciplines can autonomously collaborate. In one experiment, human researchers tasked this AI-driven lab with designing nanobodies – antibody fragments – capable of binding to the virus that causes COVID-19.

‘Over the horizon’ predictive diagnosis: GluFormer, a foundation model developed by a team including Nvidia Tel Aviv and the Weizmann Institute, analyses continuous glucose monitoring (CGM) data to predict long-term health outcomes. In a 12-year study of 580 adults, it accurately flagged 66% of new-onset diabetes cases and 69% of cardiovascular-related deaths within their top risk quartiles.
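To make a figure such as "66% of cases within the top risk quartile" concrete, the sketch below shows one plausible way such a capture rate could be calculated. It assumes the metric means the share of all observed events that occurred among the 25% of people with the highest predicted risk; the function name `top_quartile_capture` and the toy cohort are hypothetical and are not taken from the GluFormer study.

```python
def top_quartile_capture(risk_scores, had_event):
    """Share of all events that occur among the 25% highest-risk people.

    Assumption: this mirrors what "flagged within the top risk quartile"
    means; the study's exact method is not described in the article.
    """
    if len(risk_scores) != len(had_event):
        raise ValueError("risk_scores and had_event must be the same length")
    total_events = sum(1 for e in had_event if e)
    if total_events == 0:
        raise ValueError("no events to capture")

    n_top = max(1, len(risk_scores) // 4)  # size of the top quartile
    # Rank people from highest to lowest predicted risk.
    ranked = sorted(range(len(risk_scores)),
                    key=lambda i: risk_scores[i], reverse=True)
    captured = sum(1 for i in ranked[:n_top] if had_event[i])
    return captured / total_events


# Hypothetical toy cohort of eight people (not study data).
scores = [0.9, 0.8, 0.7, 0.6, 0.5, 0.4, 0.3, 0.2]
events = [True, True, False, False, True, False, False, False]
capture = top_quartile_capture(scores, events)
print(f"Events captured in top quartile: {capture:.0%}")
```

In this toy cohort, two of the three events fall among the two highest-risk people, so the capture rate is two-thirds. A model with no predictive value would be expected to capture about 25% of events in its top quartile, which is the baseline against which such figures are usually read.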

Benefits of AI in diagnosis

The Stanford AI Index 2024 reports on a randomised trial comparing the rate of successful diagnosis in complex health scenarios by GPT-4-assisted clinicians, clinicians without AI and AI alone. Clinicians with AI assistance performed only slightly better (a 76% diagnostic reasoning score) than clinicians who relied solely on conventional non-AI tools (74%). Notably, GPT-4 alone outperformed both groups of human clinicians, achieving a 92% diagnostic reasoning score, 16 percentage points higher than clinicians working without AI.

However, the Stanford AI Index considered that this study presented a more nuanced picture than AI simply being superior to humans in diagnosis:

While purely autonomous AI outperformed physician only efforts, simply giving doctors access to an LLM did not enhance their performance. This underscores a phenomenon seen in other AI-human collaborations: Bridging the gap between excellent model performance in isolation and effective synergy with clinicians requires rethinking workflows, user training and interface design.

Benefits of AI in record keeping

One area where human-AI collaboration delivers clear improvements is record keeping. The health sector has long struggled with poor patient record keeping, caused not only by the notoriously bad handwriting of doctors, but also by inconsistency and errors in what is recorded and by the bureaucratic effort required in the pressured environment of medical practice. Studies have found that ambient AI scribe technologies save clinicians approximately 30 seconds per note, reduce overall time devoted to record keeping by about 20 minutes per day and reduce feelings of burden and burnout by approximately one-third.

Challenges of AI in the health system

While the adoption of AI in the health system, including in clinical care, is advancing rapidly, London School of Economics academic Divya Srivastava argues AI technologies are failing to meet evidence-based standards – the bedrock of health practice:

Generating evidence on how well digital health technologies work is an ongoing area of research in need of advancement. For example, only 1% of apps have an evidence base and some mental health apps for depression, low mood and anxiety show that they are efficacious only in research settings.

Another key concern is data privacy and security, as AI requires access to large data sets of patient medical information. The sensitive nature of patient data requires robust data protection measures and strict compliance with privacy regulations.

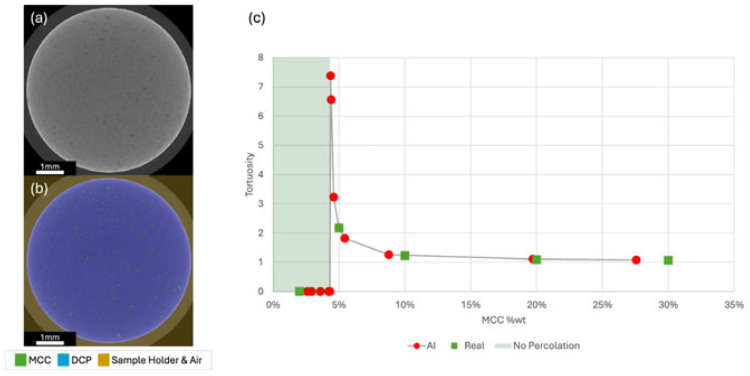

While there have been broader concerns about training AI on synthetic data, the Stanford AI Index reports “[s]ynthetic data is revolutionising healthcare by enhancing privacy-preserving analytics, clinical modelling and AI training”. A recent study in Nature found that an AI image generator trained on critical quality attributes of a drug, without any extensive physical testing data, could very closely mimic the drug release rate (the tortuosity) of real-life results of the drug testing, as depicted below:

Given AI’s powerful capabilities to correlate disparate data sets, AI can assist physicians in taking a more ‘whole of patient’ approach to diagnosis and treatment, especially by factoring into the diagnosis and treatment plan the broad range of social determinants of health, such as income, geographic location (rural vs urban), gender and race. For example, one recent study applied AI to satellite imagery to identify and explain cancer clusters. The method was based on the insight that some cancers can be linked to demographic factors such as obesity, income, employment type and surrounding environment, and that satellite imagery can be correlated with these factors. This method explained up to 64.37% of the variation in cancer prevalence.
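A statement that a model "explained" a percentage of variation is usually a statement about the coefficient of determination (R²). The sketch below assumes that is the measure behind the 64.37% figure, which the article does not confirm; the function name `r_squared` and the prevalence numbers are hypothetical and purely illustrative.

```python
def r_squared(observed, predicted):
    """Coefficient of determination: 1 - (residual variation / total variation)."""
    if len(observed) != len(predicted):
        raise ValueError("observed and predicted must be the same length")
    mean_obs = sum(observed) / len(observed)
    # Total variation of the observed values around their mean.
    ss_total = sum((y - mean_obs) ** 2 for y in observed)
    if ss_total == 0:
        raise ValueError("observed values do not vary")
    # Variation left unexplained by the model's predictions.
    ss_residual = sum((y - p) ** 2 for y, p in zip(observed, predicted))
    return 1 - ss_residual / ss_total


# Hypothetical cancer prevalence (%) in four regions, and a model's estimates.
observed = [4.0, 6.0, 8.0, 10.0]
predicted = [5.0, 5.0, 9.0, 9.0]
r2 = r_squared(observed, predicted)
print(f"Share of variation explained: {r2:.0%}")
```

On this toy data the model explains 80% of the variation. A value of 64.37% would mean that roughly a third of the differences in prevalence between areas are left unaccounted for by the model.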

However, an obvious risk in using AI to identify social determinants of health is the potential for bias. AI must be developed and trained to ensure it increases fairness and access to healthcare, rather than perpetuating existing societal injustices.

Purging bias is also an ongoing challenge beyond the initial development and training of models. Most AI applications use generative or deep-learning algorithms that continually learn from the data they receive. These technologies outperform fixed algorithms in certain applications, such as diagnosing and treating lung and breast cancer. However, these adaptive and complex algorithms bring unique challenges, in part due to a lack of understanding of how their innermost layers work.

Regulating AI in health

The Therapeutic Goods Administration (TGA) currently regulates AI within the statutory framework for medical devices, as set out in the Therapeutic Goods Act 1989 (Cth) and the Therapeutic Goods (Medical Devices) Regulations 2002. This legislation sets out a purposive definition of medical devices, encompassing any instrument, software, implant or other article that is intended to be used for human beings for the purpose of diagnosing, treating or preventing health conditions or for investigating or altering anatomy or pathology. Tools that meet this definition must be registered on the Australian Register of Therapeutic Goods and meet certain standards of safety, quality and performance. The TGA classifies the tools according to the risk they pose to consumers, depending on factors such as the invasiveness of the tool, the seriousness of the condition it treats and the level of human oversight.

However, the current regulatory framework for AI in health in Australia may not be sufficient for the emerging and dynamic nature of AI technologies, especially those that use adaptive or deep learning algorithms. In 2024, the Department of Health and Aged Care conducted a public consultation to identify areas for improvement in the existing legislative framework and to leverage opportunities for AI in the sector. This consultation aimed to address specific healthcare aspects of AI, rather than taking the whole-of-economy approach of the Department of Industry, Science and Resources in its proposals for introducing mandatory guardrails for AI in high-risk settings.

Most stakeholders agreed the current framework is flexible and robust, but suggested the need for improvement in certain areas, including:

Assigning and communicating roles and responsibilities for the development, deployment and use of AI products.

Enhancing transparency and information access for consumers and health professionals on AI products, including their intended purpose, performance and risks.

Developing mechanisms for continuous change, control and monitoring of adaptive AI products.

Maintaining international harmonisation and engagement with comparable jurisdictions on AI regulation.

Australia is not alone in facing the regulatory challenges of AI in health: many other countries are developing or updating their policies and standards in this area. Divya Srivastava identifies three building blocks of a more proactive approach to regulating AI in health, which she describes as ‘digital health technology vigilance’.

Firstly, Srivastava observes that the rapid advancement of AI has been layered over an existing fragmented approach to regulation. She calls for a government-wide approach, bringing together key institutions and stakeholders across the health system. For example, Finland’s government has brought together a range of stakeholders to work with the government on a long-term basis in constructing a digital health strategy. Along similar lines, the Australian Medical Association has called for a national governance structure including medical practitioners, patients, AI developers, health informaticians, lawyers, healthcare administrators and medical defence organisations.

Secondly, Srivastava calls for a total product lifecycle approach, where evidence generation and evaluation of AI technologies continue from the pre-market stage through to the post-market stage. This will involve collecting, analysing and reporting data on the performance and impact of AI technologies in real-world settings, and identifying and addressing any risks or opportunities that arise. It will require collaboration across complex AI supply chains involving healthcare organisations, regulatory bodies and AI developers in collating and analysing datasets for performance, clinical safety and adverse events.

This is unlikely to occur without regulatory enablers. The Ada Lovelace Institute has recommended several specific measures for AI monitoring, including the establishment of an AI ombudsman and a remedies framework. These would require developers and downstream deployers to document and disclose incidents, while also ensuring regulators are well-funded and empowered to investigate and to compel the restriction or suspension of models in cases of harmful impacts, misuse or security vulnerabilities.

Thirdly, Srivastava stresses the importance of international collaboration – to share best practices, learn from others’ experiences and align approaches. This is essential to avoid duplicating effort and to address cross-border issues such as data sharing, system compatibility and trade. As the majority of AI is produced or operated by overseas organisations, it is vital to coordinate with the international community. For example, the OECD proposes a mechanism for countries to share and review practices around AI and health via policy guidance and toolkits.

Peter Waters

Consultant