AI is trained on vast amounts of human-generated data to emulate human speech, behaviour and emotions. Over the next few weeks, we will explore how closely AI can mimic humans. This week, we look at whether AI can be trained as a digital twin of an individual; next week, whether AI models have their own personalities; and in the third week, whether AI can independently and covertly decide to lie to us.

Upload your mind to AI

In the 2014 movie Transcendence, a dying scientist uploads his consciousness to an AI program, resulting in dangerous consequences as the sentient machine embarks on a quest for power.

A recent experiment by researchers from Stanford University, Northwestern University, the University of Washington and Google DeepMind shows that “it’s no longer science fiction: your personality – your beliefs, quirks and decision-making patterns – can be captured and brought to life inside an artificial mind”.

The study compared human participants’ own answers to widely used social science tests with the answers that large language models (LLMs), primed with information about those same participants’ personalities, gave to the same questions.

Creating AI agents from real people

The AI agents were created through the following process:

The researchers recruited 1,052 human participants using stratified sampling to create a representative U.S. sample across age, gender, race, region, education and political ideology.

Each participant completed a two-hour interview which was transcribed verbatim. The researchers used an interview protocol developed by sociologists as part of the American Voices Project, prompting open-ended, narrative answers covering a wide range of topics, from participants’ life stories (for example, “Tell me the story of your life – from your childhood, to education, to family and relationships and to any major life events you may have had”) to their views on current societal issues (for example, “How have you responded to the increased focus on race and/or racism and policing?”).

The interviews were conducted by an AI agent. The AI interviewer began by asking each scripted question verbatim. As participants responded, the AI interviewer used a language model to decide dynamically how to draw out more from the interviewee. For instance, when asking a participant about their childhood, if the response included a remark like, “I was born in New Hampshire… I really enjoyed nature there,” but gave no specifics about what they loved about the place as a child, the AI interviewer would ask a follow-up question such as, “Are there any particular trails or outdoor places you liked in New Hampshire or had memorable experiences in as a child?”

When the LLM was instructed to respond as a particular participant, its prompt was loaded with the full transcript of that participant’s interview, which the LLM then drew on in answering the question.
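The pipeline described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the researchers’ code. The function names `run_interview` and `build_agent_prompt`, the prompt wording and the toy stand-ins for the participant and the follow-up model are all hypothetical. A real system would call an LLM where the stubs sit.

```python
from typing import Callable, List, Optional, Tuple


# Hypothetical signatures: get_answer stands in for the human participant,
# propose_followup stands in for the language model that decides on probes.
def run_interview(
    scripted_questions: List[str],
    get_answer: Callable[[str], str],
    propose_followup: Callable[[str, str], Optional[str]],
    max_followups: int = 2,
) -> List[Tuple[str, str]]:
    """Ask each scripted question verbatim, then up to max_followups probes."""
    transcript: List[Tuple[str, str]] = []
    for question in scripted_questions:
        answer = get_answer(question)
        transcript.append((question, answer))
        for _ in range(max_followups):
            followup = propose_followup(question, answer)
            if followup is None:  # the model judged the answer detailed enough
                break
            answer = get_answer(followup)
            transcript.append((followup, answer))
    return transcript


def build_agent_prompt(transcript: List[Tuple[str, str]], question: str) -> str:
    """Place the whole transcript ahead of the survey question (hypothetical wording)."""
    turns = [f"Interviewer: {q}\nParticipant: {a}" for q, a in transcript]
    return (
        "Here is an interview with a research participant.\n\n"
        + "\n\n".join(turns)
        + "\n\nAnswer the following question as this participant would.\n"
        + f"Question: {question}\nAnswer:"
    )


# Toy stand-ins, for illustration only.
def fake_participant(q: str) -> str:
    if q.startswith("Tell me the story"):
        return "I was born in New Hampshire... I really enjoyed nature there."
    return "The trails around the lake near our house."


def fake_followup_model(question: str, answer: str) -> Optional[str]:
    # Probe only when the answer mentions nature but gives no specifics.
    if "nature" in answer and "trail" not in answer:
        return "Are there any particular trails or outdoor places you liked?"
    return None


transcript = run_interview(
    ["Tell me the story of your life."], fake_participant, fake_followup_model
)
prompt = build_agent_prompt(
    transcript, "Generally speaking, would you say that most people can be trusted?"
)
print(prompt)
```

The same `build_agent_prompt` shape could carry demographic details or a short self-description in place of the transcript, which is how the more limited comparison conditions differ.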

The researchers hypothesised that the LLM could combine its knowledge of human behaviour embedded in its parameters (the mathematical correlations learned from the vast store of information on which it was trained, including sociological and psychological works) with the rich information about each participant gained from the interview, to predict how that individual would answer the questions in the standard studies.

To test this, the researchers also prompted the LLM with two more limited sets of information about each participant in place of the full interview:

Only the individual’s demographic information (age, gender, race and political ideology).

A brief paragraph written by each study participant about themselves, including their personal background, personality and demographic details.

How good is AI at second-guessing humans?

The researchers tested the agents against well-known social science instruments (described by the researchers as ‘canonical’ in social research), including:

General Social Survey (GSS) provides data on the attitudes, behaviours and social characteristics of Americans (not to be confused with the Australian Bureau of Statistics survey of the same name).

Big Five Inventory assesses five personality dimensions: openness, conscientiousness, extraversion, agreeableness and neuroticism, and is often used by employers as part of an interview process.

Five well-known behavioural economic games, including the dictator game, trust game, public goods game and prisoner’s dilemma. Behavioural economics aims to predict how people actually behave by incorporating psychological elements and learning into game theory.

To account for varying levels of self-consistency among the participants, each participant completed the tests twice, two weeks apart. The agents’ results were then normalised against how consistently each participant matched their own earlier answers.

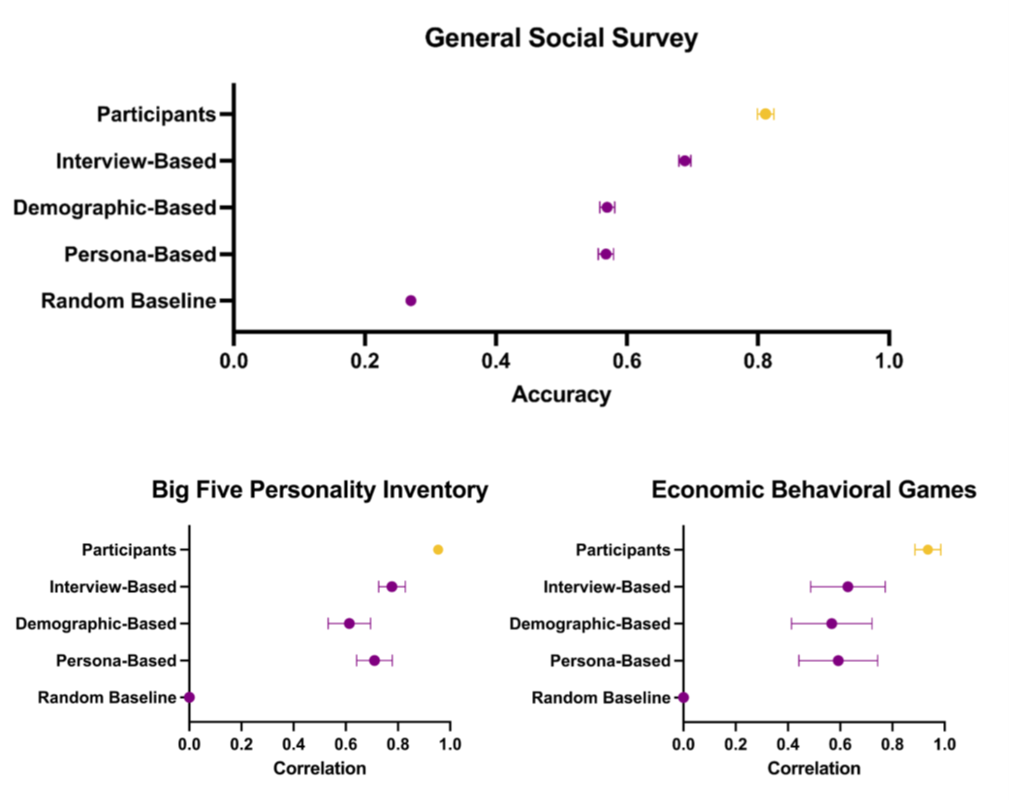

The following graphs show the correlation between the answers given by the humans and the responses given by the AI trained on the full interview, the AI trained only the demographic data and the AI trained only the persona data (the single self-drafted paragraph):

The GSS results showed that the ‘raw’ agreement between the interview-based AI’s answers and the participants’ answers was 68.8% (as shown in the graph). However, the participants matched their own earlier answers only 81% of the time (also depicted in the graph). The researchers therefore derived a normalised accuracy of 85% (roughly 68.8% divided by 81%).

The interview-based agents significantly outperformed both demographic-based and persona-based AI agents by a margin of 14-15 percentage points.

The interview-based agents also performed impressively in reflecting the participants’ personality traits, with an 80% correlation.

However, the interview-based AI struggled to predict participants’ behaviour in the economic games (only 66%). Its predictions were also not much more accurate than those of AI agents given only the more limited demographic or persona information.
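The normalisation can be made concrete with a short sketch. It assumes the normalised score is a simple ratio: the agent’s agreement with a participant’s first-wave answers, divided by the participant’s own second-wave agreement with those answers. That assumption reproduces the article’s 85% from 68.8% and 81%. The article does not spell out how the 80% and 66% correlation figures were computed. Treating them as correlations normalised in the same way is also an assumption. All helper names and toy data below are invented for illustration.

```python
from math import sqrt
from typing import Sequence


def accuracy(a: Sequence[str], b: Sequence[str]) -> float:
    """Share of questions on which two sets of categorical answers match."""
    return sum(x == y for x, y in zip(a, b)) / len(a)


def normalised_accuracy(agent, wave1, wave2) -> float:
    # Agent-vs-human agreement divided by the human's own test-retest agreement.
    return accuracy(agent, wave1) / accuracy(wave2, wave1)


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation coefficient of two equal-length numeric sequences."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sqrt(sum((a - mx) ** 2 for a in x))
    sy = sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def normalised_correlation(agent, wave1, wave2) -> float:
    # Same idea for continuous scores (assumed): divide by test-retest correlation.
    return pearson(agent, wave1) / pearson(wave2, wave1)


# The article's headline GSS figures: 68.8% raw agreement, 81% self-consistency.
print(round(0.688 / 0.81, 2))  # 0.85

# Invented GSS-style answers for one participant.
wave1 = ["A", "B", "C", "D", "E"]
wave2 = ["A", "B", "C", "D", "X"]  # the participant two weeks later
agent = ["A", "B", "X", "D", "X"]
print(round(normalised_accuracy(agent, wave1, wave2), 2))  # 0.75

# Invented personality-trait scores across five participants.
scores_w1 = [3.2, 4.1, 2.5, 3.8, 4.6]
scores_w2 = [3.0, 4.3, 2.7, 3.6, 4.4]
scores_ai = [3.5, 3.9, 2.9, 3.4, 4.2]
print(round(normalised_correlation(scores_ai, scores_w1, scores_w2), 2))
```

Dividing by the participant’s own consistency sets a ceiling. An agent cannot reasonably be expected to predict someone’s answers more reliably than that person reproduces them two weeks later.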

The researchers also tested how the volume and form of the interview content affected the results by:

Randomly removing 80% of the interview transcript (equivalent to removing 96 minutes of the 120-minute interview). Even so, the AI achieved 79% alignment with the participants’ answers.

Using an AI-generated summary of the full interview in place of the transcript. The AI still achieved 83% alignment with the participants’ answers.
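The first of these tests can be sketched as follows. The sketch assumes the transcript is split into utterances that are dropped at random. The article does not say at what granularity material was removed, and `ablate_transcript` is a hypothetical helper. The surviving utterances keep their original order, and a fixed seed makes the cut-down transcript reproducible.

```python
import random
from typing import List, Optional


def ablate_transcript(
    utterances: List[str], remove_fraction: float = 0.8, seed: Optional[int] = None
) -> List[str]:
    """Randomly drop a fraction of utterances, keeping the survivors in order."""
    rng = random.Random(seed)
    keep_n = round(len(utterances) * (1 - remove_fraction))
    kept = sorted(rng.sample(range(len(utterances)), keep_n))
    return [utterances[i] for i in kept]


# Hypothetical transcript of 100 utterances. Removing 80% leaves 20,
# just as removing 96 of 120 minutes leaves 24 minutes.
utterances = [f"utterance {i}" for i in range(100)]
shortened = ablate_transcript(utterances, seed=0)
print(len(shortened))  # 20
```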

The researchers conclude that “when informing language models about human behavior, interviews are more effective and efficient than survey-based methods”.

These results also suggest that AI does not need to know a great deal about you to second-guess you successfully. This is probably because it can put you under the microscope of the comprehensive knowledge of human behaviour on which it has been trained.

The upside

The researchers argue that the more accurate simulation of human behaviour achieved by their interview-based approach could enable broad applications in policymaking and social science:

How might, for instance, a diverse set of individuals respond to new public health policies and messages, react to product launches or respond to major shocks? When simulated individuals are combined into collectives, these simulations could help pilot interventions, develop complex theories capturing nuanced causal and contextual interactions and expand our understanding of structures like institutions and networks across domains such as economics, sociology, organisations and political science.

The study results suggest that an interview-based approach may help reduce bias in AI simulations of people. The researchers measured the Demographic Parity Difference (DPD), which is the gap in performance between the best- and worst-performing groups. They compared the DPD of the interview-based agents with that of agents prompted only with demographic information. On the GSS, the racial DPD fell by more than a third, from 12.35 to 7.85 percentage points. On the personality traits, it fell by 60%, but on the economic games it fell by only 7%.

The researchers noted that gender-based DPD remained relatively constant across tasks, likely because the gap between genders was already small.
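As defined above, DPD is simply the spread between the best- and worst-performing groups. The minimal sketch below uses hypothetical helper names and invented group scores. It also works through the relative change in the GSS figures: a fall from 12.35 to 7.85 percentage points is a reduction of about 36%.

```python
from typing import Dict


def demographic_parity_difference(score_by_group: Dict[str, float]) -> float:
    """Gap between the best- and worst-performing groups' scores."""
    return max(score_by_group.values()) - min(score_by_group.values())


def relative_reduction(before: float, after: float) -> float:
    """Fractional fall from `before` to `after`."""
    return (before - after) / before


# Invented per-group accuracies, for illustration only.
example = {"group_a": 0.80, "group_b": 0.74, "group_c": 0.77}
print(round(demographic_parity_difference(example), 2))  # 0.06

# The article's GSS figures: racial DPD of 12.35 points vs 7.85 points.
print(round(relative_reduction(12.35, 7.85), 2))  # 0.36
```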

The (obvious) downside

As Stanford’s Institute for Human-Centered AI notes, this methodology could “raise concerns about deepfake videos, co-option of individuals’ likenesses and a world where people have conversations with AI versions of their friends or relatives”.

The lead researcher, Joon Sung Park, commented that “like our genomic data, [the generative agent] should belong to and be controlled by the person whose portrait it represents”. Given the amount of personal information many of us freely post on social media, AI may well be able to readily harvest enough information about an individual’s personality to mimic them without the need for an extensive sit-down interview.

Peter Waters

Consultant