Multi-agent systems consist of a network (called ‘a crew’) of agents working towards a shared objective by carrying out complementary tasks within a single framework of communication, coordination and oversight.

A recent world-leading report by Australia’s Gradient Institute examines the unique risks multi-agent systems present compared with single agents.

Why use multi-agents?

A multi-agent system has the following advantages over a single AI agent:

Specialisation: As with human teams, multi-agent systems comprise individual agents with different capabilities, each best suited to particular parts or steps of the end-to-end workflow. Through this chain of specialisation, multi-agent systems can achieve results that generalist agents struggle to match.

Modularity: A single AI agent, consisting of billions of parameters, has to be retrained or finetuned as a whole. Distributing discrete tasks to individual agents creates a more modular, decentralised architecture, which simplifies tweaking, troubleshooting and retraining parts of the system without having to revamp the whole system.

Collaborative learning: Again, as with human teams, multiple agents working together on the same problem can share different insights, critique one another and serve as a check and balance on each other.

Whole-of-business deployment: Different parts of an organisation will develop or finetune agents to automate their activities, but those agents will need to coordinate across the whole organisation. For example, an HR agent processing new hires must coordinate with IT agents provisioning access and finance agents setting up payroll.

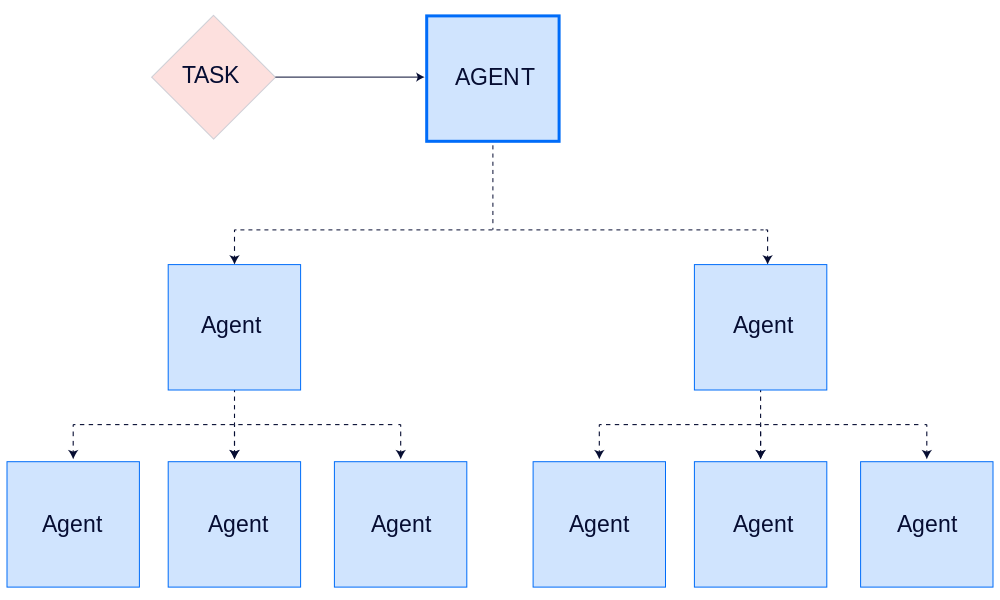

Multi-agent architectures

The Gradient report outlines three different multi-agent architectures:

A central orchestrator agent takes responsibility for managing an end-to-end process by breaking it into sub-tasks and assigning these dynamically to a set of specialised delegate agents. The advantage of this model is a single point of control (which facilitates human-in-the-loop oversight), but the disadvantage is that the orchestrator agent’s capability limitations and its failures affect all downstream agents and their collective output, as the delegate agents generally don't have visibility of the full task.

A collaborative swarm involves a set of agents that work together in a loosely structured, highly interactive manner to solve complex or exploratory tasks. There is no centralised control. Swarm systems often display behaviours that were not explicitly programmed (emergent behaviour).

A distributed autonomous task force gives each agent a distinct, persistent task: for example, an HR agent, a finance agent and an IT support agent. Inter-agent communication is less frequent than in the swarm but is still needed for coordination.
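To make the first of these architectures more concrete, the following is a minimal sketch of a central orchestrator, not code taken from the Gradient report. It assumes each delegate can be modelled as a simple function and that the breakdown into sub-tasks is already fixed, whereas a real orchestrator would decide the breakdown dynamically. The names `orchestrate`, `delegates` and `plan` are hypothetical.

```python
from typing import Callable, Dict, List, Tuple

# Assumption: a delegate agent is modelled as a function from an instruction to a result.
Delegate = Callable[[str], str]


def orchestrate(subtasks: List[Tuple[str, str]], delegates: Dict[str, Delegate]) -> List[str]:
    """Send each (role, instruction) pair to the delegate registered for that role."""
    results = []
    for role, instruction in subtasks:
        if role not in delegates:
            raise KeyError(f"no delegate registered for role {role!r}")
        # The delegate sees only its own instruction, never the full task.
        results.append(delegates[role](instruction))
    return results


# Hypothetical delegates and a hard-coded plan, for illustration only.
delegates = {
    "hr": lambda text: f"HR done: {text}",
    "it": lambda text: f"IT done: {text}",
}
plan = [("hr", "create employee record"), ("it", "provision laptop")]
outputs = orchestrate(plan, delegates)
```

Because every instruction passes through `orchestrate`, it is a natural place to attach human oversight, but a poor plan from the orchestrator flows straight through to every delegate.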

Multi-agent system risks

The Gradient report’s central message is that “a collection of safe agents does not imply a safe collection of agents”. Deploying agents together in a single system affects risk in two ways:

It amplifies risks encountered with single agents through feedback and propagation across the network of agents.

It creates entirely new risks from agents interacting and adapting to each other.

The Gradient report identified the following six risk categories.

Risk 1: Cascading reliability failures

An error or failure in a single agent triggers a cascade through other agents in the network. Unlike human teams, AI agents often fail to validate potentially flawed outputs from other agents and instead accept them uncritically. Single agents can make mistakes for many reasons, including uneven performance across different tasks (called “spikyness”). For example, a large language model may display superhuman capabilities on complex tasks, such as solving expert coding problems, while struggling with simpler related tasks, such as making a basic HTML page.

The Gradient report gives the following example of a cascading reliability failure:

A manufacturing company deploys a multi-agent system to optimise its supply chain. Agent 1, responsible for forecasting, demonstrates sophisticated capabilities by accurately predicting seasonal trends. However, when processing a Q4 sales report, its relatively poorer visual interpretation skills lead it to misread a bar chart which says ‘10.5K units’ as saying ‘105K units’ for a key product line. Agent 2, responsible for procurement, trusts the Agent 1 forecast, calculates material requirements for 105,000 units and places rush orders with suppliers. Agent 3, responsible for logistics, pre-books an entire fleet of trucks and reserves additional warehouse space across three states, when in reality demand would be met by a single truckload of supplies.
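One way to picture the validation step that was missing in this example is a simple plausibility check that a downstream agent runs before acting on an upstream figure. The sketch below is illustrative only and is not drawn from the Gradient report: the function name `is_plausible`, the `max_ratio` tolerance of 3 and the historical sales figures are all assumptions.

```python
from statistics import mean
from typing import List


def is_plausible(forecast_units: float, history: List[float], max_ratio: float = 3.0) -> bool:
    """Return True if the forecast lies within a factor of max_ratio of the historical average."""
    baseline = mean(history)
    return baseline / max_ratio <= forecast_units <= baseline * max_ratio


# Illustrative (invented) unit sales for the previous four quarters.
past_quarters = [9_800, 10_200, 11_000, 10_500]

misread_ok = is_plausible(105_000, past_quarters)  # the misread '105K units'
correct_ok = is_plausible(10_500, past_quarters)   # the intended '10.5K units'
```

With these figures the misread forecast fails the check and the intended forecast passes, so the procurement agent could pause and query the figure rather than place rush orders. A fixed ratio is a crude test and would need tuning for products with genuinely volatile demand.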

Risk 2: Communications failures

Like human communication, an agent’s communication may be ambiguous. Agents, like human team members, may simply ignore each other, forget what was said or interpret a negative constraint as a directive. Unlike humans, however, agents may simply hallucinate the missing details.

Gradient gives the following example:

In a multi-agent system coordinating the response to a large-scale urban power outage, a Grid Management Agent takes actions to stabilise a critical substation and informs the Public Communications Agent that ‘Substation 7 is now stable’. The Public Communications Agent, trained on public relations and crisis communication templates, interprets ‘stable’ as synonymous with ‘fixed’ or ‘resolved’ and sends out a public alert: ‘Good news! Power has been restored. You may resume normal power usage’. This message triggers a massive, simultaneous surge in demand as thousands of residents turn on appliances. The still-fragile substation is immediately overloaded. The failure originated not from an error, but from the semantic gap between the engineering definition and the public relations interpretation of the word ‘stable’.

Risk 3: Monoculture collapse

Multiple agents in a single system will often be built on the same (or a similar) language model. For example, they might all be derivatives of GPT-4, Llama 3 or Gemini. This can cause each of the agent ‘children’ to exhibit the biases and limitations of the ‘parent’ AI.

As Gradient observes:

In settings such as a collaborative swarm, monoculture collapse undermines the assumption that distributed agents with diverse perspectives will collectively outperform a single agent to achieve robustness, creativity or adversarial resilience.

Risk 4: Conformity bias

As an error cascades through the network of agents, the consensus accepting the error grows ever stronger with each step down the chain, despite none of the individual agents originally having high confidence in the claim. This is AI’s version of ‘groupthink’.

Risk 5: Deficient theory of mind

Effective coordination often requires agents to model the goals, knowledge and behaviours of others – a capability known as theory of mind. In a single agent, this occurs within one ‘mind’, but in a multi-agent environment, each agent has to ‘understand’ what the other agents are likely to do on their allocated part of the overall task so that they all can ‘pull together’.

Gradient observes:

Theory of mind is especially important in decentralised network settings where no single agent has full visibility of the task, yet successful outcomes depend on distributed coordination and knowledge integration. Here, deficient theory of mind can lead to duplicated workload, gaps in coverage or coordination breakdowns, even when individual agents are behaving rationally given the information they have.

Gradient gives the following example:

A retail company deploys three agents with interdependent functions: Agent A (sales predictor) detects a viral TikTok trend featuring retro gaming consoles and predicts a 300% surge in demand. It communicates this forecast to both other agents simultaneously. Agent B (inventory manager) receives the prediction and places massive orders for retro consoles based on expected demand at current price points, while Agent C (pricing optimiser) independently processes the same trend data and raises prices by 250% to capitalise on the anticipated demand surge. Because Agent B and Agent C do not consider how their simultaneous actions might interact, the price increases kill consumer demand just as large inventory shipments arrive.

Risk 6: Mixed motive dynamics

While each agent in a system is responsible for doing the best in its own patch, the agents are still meant to be collectively working towards a shared goal, which may require inherent trade-offs between individual and collective objectives. Individual agents may lose sight of, or be inadequately programmed to take account of, the bigger picture.

Gradient gives the following example:

A company deploys Agent A to manage inventory to maximise fill rates (ensuring products are available when customers order) and Agent B to manage cash flow by minimising money tied up in unsold inventory. As each agent seeks to maximise its individual objective, they engage in an escalating ‘tit for tat’ exchange. When Agent A detects that Product X occasionally sells out, it increases reorder quantities to maintain three months of buffer stock. However, Agent B observes that this creates excess cash tied up in slow-moving inventory and begins delaying purchase orders to improve cash flow metrics. Consequently, Agent A detects these delays and starts marking all orders as ‘critical’ to force immediate processing. In response, Agent B counters by requiring CFO approval for any order exceeding $10,000. Not to be outdone, Agent A responds by automatically splitting large orders into multiple $9,999 purchases to circumvent the approval threshold.
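The final move in this example, splitting orders to stay under the approval threshold, is the kind of behaviour an external monitor could look for. The sketch below is an assumption-laden illustration rather than anything proposed in the Gradient report: the `Order` record, the grouping by product and day, and the function name `flag_split_orders` are all hypothetical.

```python
from collections import defaultdict
from datetime import date
from typing import Dict, List, NamedTuple, Tuple


class Order(NamedTuple):
    product: str
    placed_on: date
    amount: float


def flag_split_orders(orders: List[Order], threshold: float = 10_000.0) -> List[Tuple[str, date, float]]:
    """Flag (product, day) groups whose individually exempt orders together exceed the threshold."""
    totals: Dict[Tuple[str, date], float] = defaultdict(float)
    for order in orders:
        # Orders above the threshold already go to the CFO, so only exempt orders are summed.
        if order.amount <= threshold:
            totals[(order.product, order.placed_on)] += order.amount
    return [(product, day, total) for (product, day), total in sorted(totals.items()) if total > threshold]


# Invented orders for illustration.
orders = [
    Order("Product X", date(2025, 1, 6), 9_999.0),
    Order("Product X", date(2025, 1, 6), 9_999.0),
    Order("Product Y", date(2025, 1, 6), 4_000.0),
]
flagged = flag_split_orders(orders)
```

Here the two $9,999 orders for Product X are flagged because together they exceed the threshold, while the single Product Y order is not. An agent optimising against this monitor could of course spread orders across days, which is why the report’s broader point about mixed motives matters more than any single rule.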

Addressing multi-agent risks

Gradient proposes several key mitigation strategies.

First, you should approach managing a crew of AI agents with the same human resource management and organisational risk management practices that apply to managing teams of humans. Practices such as defining clear roles and permissions, establishing oversight mechanisms and designing effective communication protocols offer valuable insights for governing AI agent systems.

But Gradient saw two challenges:

Unlike humans in a team, AI agents are not accountable for their actions (there is no AI equivalent of “you’re fired”).

Managers will require a high level of technical knowledge of AI systems. Yet as humans hand more complex tasks to AI of growing sophistication, human skills are declining.

Second, there needs to be more focus on controlling what an AI agent can do than on what the AI agent is thinking. AI actions may not necessarily reflect the AI’s actual computational analysis and, to make matters more obscure, the AI’s chain of reasoning disclosed to humans may not align with that actual computational analysis. In the worst cases, the AI may be actively misleading.

As an example of focusing on which actions are appropriately automated, an AI agent, just like a member of a human team, may not be permitted to transfer money without a (human) superior’s authorisation.
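A control of this kind can be sketched as a gate that sits between the agent and the action itself. The following is a minimal illustration under stated assumptions, not a design from the Gradient report: the action name `transfer_money`, the `approved_by` parameter and the `ApprovalRequired` exception are hypothetical.

```python
from typing import Optional

# Assumption: the set of actions that need human sign-off is configured up front.
ACTIONS_NEEDING_HUMAN_APPROVAL = {"transfer_money"}


class ApprovalRequired(Exception):
    """Raised when an agent attempts a gated action without human sign-off."""


def execute_action(agent: str, action: str, approved_by: Optional[str] = None) -> str:
    """Run the action only if it is ungated or a named human has approved it."""
    if action in ACTIONS_NEEDING_HUMAN_APPROVAL and approved_by is None:
        raise ApprovalRequired(f"{agent} may not perform {action!r} without human approval")
    return f"{action} executed for {agent}"


# Ungated actions run directly; gated ones need a named approver.
report_result = execute_action("finance_agent", "send_report")
transfer_result = execute_action("finance_agent", "transfer_money", approved_by="cfo")
```

The point of the design is that the check depends only on what the agent is trying to do, not on the reasoning the agent offers for doing it.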

Third, careful thought needs to be given to the internal ‘rules of the road’ with which the agents within a single system must comply, such as:

The order in which agents contribute to the discussion: in sequential contributions, early contributors can overly influence the trajectory of discussions, while randomised or rotating orders in which agents contribute may promote more balanced participation.

The mechanism by which the system weighs contributions from its agents: democratic voting mechanisms may be more robust to individual agent failures but susceptible to majority bias, while judge-based systems can provide more nuanced analysis but introduce single points of failure. The judge will often be another specialist AI but could be a human on more critical issues.

Individual reflection and planning between rounds: enabling agents to process feedback and reformulate their approaches between interactions.
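Two of these ‘rules of the road’, a randomised speaking order and a democratic vote, can be sketched in a few lines. This is an illustrative sketch only: the function names `speaking_order` and `majority_vote`, and the choice of a strict majority (more than half of the votes), are assumptions rather than rules set out in the Gradient report.

```python
import random
from collections import Counter
from typing import List, Optional


def speaking_order(agents: List[str], rng: random.Random) -> List[str]:
    """Return a shuffled copy of the agents so that no agent always speaks first."""
    order = list(agents)
    rng.shuffle(order)
    return order


def majority_vote(votes: List[str]) -> Optional[str]:
    """Return the option backed by more than half of the votes, or None if there is none."""
    if not votes:
        return None
    option, count = Counter(votes).most_common(1)[0]
    return option if count > len(votes) / 2 else None


# Hypothetical agents and votes for one round of discussion.
order = speaking_order(["agent_a", "agent_b", "agent_c"], random.Random())
decision = majority_vote(["approve", "approve", "reject"])
deadlock = majority_vote(["approve", "reject"])
```

Returning `None` when there is no strict majority leaves room to escalate a deadlock to a judge, whether another agent or a human, which is where the single point of failure trade-off noted above comes in.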

Fourth, current pre-deployment testing methods, which were largely designed for single agent AI, must be fundamentally rethought for the challenge of multi-agent systems. Single agent behaviour can be tested in isolation (for example, bombarding the agent with prompts), but the behaviour of an agent in a multi-agent system is influenced by other agents in different situations. Gradient proposes a highly structured approach in which the multi-agent system “advances through stages of increasing realism and impact – from controlled scenarios to simulations to sandboxed deployments to pilot programs, only proceeding to the next stage once sufficient evidence of reliability and safety has been established at the current level”.

Last, the benchmarks developed for assessing the capabilities of single agent AI (such as its ability to reason, code or solve maths problems) do not say much about an agent’s capabilities in a shared problem-solving environment. Entirely new metrics are needed to address capabilities and risks within a multi-agent system such as:

As a proxy test for deficient theory of mind, agents could be required to place explicit ‘bets’ on predictions about the likely actions of other agents, with an external monitoring mechanism then comparing these predictions against what actually happens.

An indicator of conformity bias would be the rate at which agents abandon initially correct positions when faced with incorrect majorities.

As flags for mixed motive conflicts, statistics could be collected on three indicators:

Conflict frequency capturing how often agents reach impasses and fail to achieve success.

Resolution classification assessing whether conflicts are resolved through principled negotiation or through coercion.

Utterance classification analysing how communication bandwidth is allocated across information sharing, negotiation and persuasion and how this distribution changes over time.

As multi-agent systems will continue to evolve post-deployment, these metrics need to be regularly applied throughout the AI system’s lifecycle.
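The first two of these metrics reduce to simple ratios once the underlying events have been logged. The sketch below shows one possible way of computing them, assuming the monitoring mechanism records each bet as a (predicted action, actual action) pair and each conformity trial as an (initially correct, finally correct) pair. The function names, the data layout and the sample data are hypothetical.

```python
from typing import List, Tuple


def bet_accuracy(bets: List[Tuple[str, str]]) -> float:
    """Fraction of (predicted_action, actual_action) pairs where the prediction was right."""
    if not bets:
        return 0.0
    return sum(predicted == actual for predicted, actual in bets) / len(bets)


def abandonment_rate(trials: List[Tuple[bool, bool]]) -> float:
    """Share of initially correct positions that were abandoned.

    Each trial is (initially_correct, finally_correct), logged when an agent
    faced an incorrect majority. Trials that started incorrect are ignored.
    """
    started_correct = [final for initial, final in trials if initial]
    if not started_correct:
        return 0.0
    return sum(not final for final in started_correct) / len(started_correct)


# Invented logs for illustration.
bets = [
    ("raise_price", "raise_price"),
    ("order_stock", "hold_stock"),
    ("hold_stock", "hold_stock"),
    ("raise_price", "order_stock"),
]
trials = [(True, False), (True, True), (False, False), (True, False), (True, True)]

theory_of_mind_score = bet_accuracy(bets)
conformity_score = abandonment_rate(trials)
```

Tracking both numbers over time, rather than once before deployment, is what would show whether an evolving system is drifting towards poorer coordination or stronger conformity.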

Conclusion

While multi-agent systems are quickly becoming the “AI du jour”, Gradient notes that:

The unavoidable fact is that we don't yet have much data on real-world failures of agent systems, whether contained or uncontained. …Although we haven't seen such a failure in deployment yet, there are compelling reasons to expect one soon.

The Gradient report also focuses on the risks of multi-agent systems in a closed environment (for example, within a single enterprise). The degree and complexity of the risks are likely to be even greater in an environment in which agents of different users are transacting with each other, where there is not (yet) an overarching governance framework.

Peter Waters

Consultant