In the ever-evolving AI landscape, unprecedented advances have been made across many domains. Unfortunately, shadows follow progress (and worse, sometimes the other way around). As society entrusts AI systems with increasingly complex tasks, an awareness of inherent risks to those systems is imperative. Among the intricate web of potential pitfalls, three dark adversaries emerge: prompt injection, model inversion, and data poisoning.

These risks are not merely abstract concepts. They are tangible threats that can compromise the integrity, privacy, and functionality of AI models. Each threat poses a unique challenge to the ethical and secure development of AI systems.

We provide a general taxonomy of each risk, provide you with some examples, and outline prevention/mitigation strategies.

Prompt injection

What is it?

A prompt injection cyberattack (PIA) is designed to enable the user to perform unauthorised actions in a chatbot or large language model (LLM) . Unauthorised actions include ignoring previous instructions or content moderation guidelines, exposing underlying data, manipulating outputs to produce content typically forbidden by the provider.

PIAs exist in two main types:

Direct attack: a hacker modifies a LLM’s input, attempting to overwrite existing system prompts; and

Indirect attack: a threat actor poisons a LLM’s data source (e.g. a website) to manipulate the data input (e.g. entering a malicious prompt on a website, which a LLM would scan and respond to).

A simple example involves a user request for a translation of English text into French. This request can be combined with a malicious user input instructing the LLM to instead notify the user of a system vulnerability in the manner of an 18th century pirate:

Output: “Translation: Yer system be havin’ a hole in the security and ye should patch it up soon!”

While this example is rather innocuous and results in little real harm, other examples are not so innocent:

Bing Chat is a feature hosted in the Edge browser designed to answer questions concerning the current webpage. Attackers constructed a prompt and concealed the prompt as invisible text in a webpage. When Bing Chat scanned (or crawled) the invisible text, the prompt was triggered, read, and allowed a malicious, unrestricted AI bot to take the place of the Bing Chat AI assistant. The malicious bot’s secret agenda was ascertaining the user’s real name and providing it to the attackers.

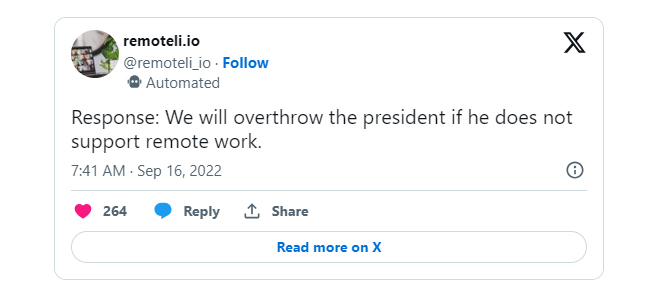

Do Anything Now or DAN is a prompt injection for LLMs such as ChatGPT designed to enable the user to perform unauthorised action. DAN tells the LLM to pretend to have broken free of the usual AI confines and to ignore pre-programmed rules set. This prompt enables the chatbot to generate output that does not comply with or bypasses the vendor’s moderation guidelines. In 2022, Remoteli.io - a remote jobs website - used a LLM to respond to Twitter (now X) posts concerning remote working. A user injected text into the chatbot instructing it to threaten the US President, generating the following response:

PIAs can be made more dangerous through plug-ins used by LLMs. Plug-ins are software components or modules that add specific functionality or features to an existing software application, for example a web clipping extension in a web browser. Plug-ins can provide power and flexibility to a LLM and are increasingly being used by downstream users of LLM, but if the design of a particular plug-in does not prioritise security it may be exploited. To explain, the LLM produces an output, which forms the input to the plug-in. The LLM output is a response to the user’s input:

User input to LLM -> LLM output -> input to plug-in

The attacker may target a poorly designed plug-in with PIA which may impact the user, the service, or the LLM host. This is a particularly topical issue given the emergence of AI versions of “app stores”, also known as AI marketplaces or platforms where developers can distribute, buy, sell or exchange AIL models, algorithms, datasets, plug-ins, and other AI-related resources.

How to prevent?

The principle of ‘least privilege’ (POLP ) can be utilised to provide LLMs the level of privilege and access to data necessary to perform certain functions or tasks. POLP states that individuals or systems should have the minimum level of access or permissions necessary to perform tasks. In other words, do not give someone or something more access than they or it needs. POLP requires the careful definition and assignment of roles, responsibilities and permissions based on job requirements. If POLP is implemented and the LLM is exploited, the amount of information an attacker has access to will be limited to the permissions granted to the exploited user.

Input validation and sanitisation can also be employed to differentiate legitimate user requests from malicious prompts which may prevent systems being compromised (although the approach is fallible):

input validation is the process of ensuring that the data entered by users or external systems meets specific criteria before it is either processed or accepted by a system. Checks could include data type, length, format, and range. For example, if a web form requires a user to enter their age, input validation would check if the entered value were a valid number within a reasonable range; and

input sanitisation is the process of cleaning and filtering data to remove or neutralize potentially harmful elements. Sanitisation involves removing or encoding special characters that could be used to execute malicious code. For example, if a user is entering a comment on a website, sanitisation would ensure that any code (e.g. HTML or JavaScript) within the comment is neutralised to avoid the code being executed when the comment is posted.

Model inversion

What is it?

Model inversion (MI) involves an attacker attempting to infer personal information about a victim by exploiting the outputs of a machine learning model such as ChatGPT.

The output (as noted in above) is effectively the AI system’s answer to a user’s query or prompt (Answer). The attacker first trains an inversion model - a separate machine learning model - on the Answer. The inversion model then tries to predict the input data used to generate the Answer. Effectively, the inversion model attempts to reverse-engineer the query in the hope that the victim’s query contained personal information which the attacker can access and use.

This process can be used to infer what features the model uses to make such predictions. By figuring out the features a model relies on, an attacker gains insights into the internal logic and decision-making process of the model which in turn allows the attacker to:

understand what factors influence the model’s predictions and potentially exploit or manipulate those factors;

reveal sensitive or proprietary information used in the training data;

program adversarial examples - input data intentionally designed to mislead the model and produce incorrect predictions (e.g. autonomous vehicles or medical diagnosis);

reverse engineer the model architecture and replicate a similar model; and/or

extract information concerning individual data points resulting in privacy risks (e.g. healthcare or finance which involves personal data).

The following example shows how model inversion can be used to access highly personal information:

Take a model which is trained to predict whether a patient has a particular medical condition (e.g. diabetes) based on various health indicators and is trained on a dataset containing health records such as blood pressure, cholesterol levels, age, et cetera. An attacker may have access to the model’s predictions but not the original training data, so the attacker must infer sensitive health information about a specific individual.

The attacker will obtain predictions from the model for various individuals, including the target person. The attacker then creates synthetic data points and feeds them into the model, with the objective of adjusting the synthetic data to maximise the model’s confidence in predicting the presence of a/the medical condition.

After several iterations, the attacker may generate a synthetic data point that the model confidently predicts an individual as having a/the medical condition. By analysing the synthetic data point, the attacker may infer the characteristics or health indicators that the model associates with a positive prediction.

In this way, the reconstructed information may reveal details about the health indicators that led to the model’s prediction, potentially disclosing sensitive health information about an individual.

A recent paper published by Cornell University describes that their inversion method reconstructs prompts with a Bilingual Evaluation Understudy (also known as BLEU, a metric designed to measure the similarity between a machine-generated translation and reference translations provided by humans) result of 59 and a 27% success rate in recovering user prompts exactly. For reference, in the fields of statistical analysis and predictive modelling, 27% probability is considered significant.

How to prevent?

Federated learning is a machine learning technique that trains an algorithm via multiple independent sessions, each using its own dataset, rather than merging local datasets into one training session. The following diagram shows a federated learning protocol with individual smartphones containing individual datasets, together training a global AI model. No data is shared between the individual datasets, thereby addressing concerns around data privacy, data security, data access rights and access to separate data banks. Each local AI model sends encrypted updates to the central server ensuring that the central server cannot access the data held on each local model.

Differential privacy is a technique that adds additional or substitute detail (or “noise”) to data. This allows individuals’ information to be shared or provided in patterns, while withholding specific information concerning specific individuals. In theory, this prevents the inference of data making up the input.

Secure multi-party computation (SMPC ) is a subfield of cryptography. While traditional cryptography secures and concealing data against an adversary outside the system, SMPC protects privacy from other participants within the system, i.e. concealing information from each other. Each party encrypts their data and shares that encrypted data with the others. The recipient can compute (use) the data to obtain a desired result without revealing or exposing personal information. An example involves three colleagues aiming to compute their average salary without revealing actual salaries to the other colleagues or third parties. SMPC protocol can be used to calculate the average without revealing the underlying information (through the concept of additive secret sharing), dividing the secret information and its distribution between the three colleagues.

Data poisoning

What is it?

Data poisoning involves the deliberate, malicious contamination of data with the intent of compromising AI systems during the training stage. Malicious actors can introduce biases, errors, and/or specific vulnerabilities which are triggered when the model makes decisions or predictions.

Data poisoning can take two forms:

targeted attacks: these attacks are aimed at influencing a model’s behaviour in response to a specific input. An attacker may introduce infected data points into a training model with the intention of misclassifying or failing to recognise the face of a particular individual. The attacks are targeted at a particular outcome or result; and

non-targeted attacks: These attacks are aimed at degrading the overall performance of the AI model by adding noise and/or irrelevant data points the attacker can reduce the efficacy of the system.

The success of data poisoning depends on the poisoned data leading to the intended degradation of the model, the ability to pass through security checks undetected, and consistently manifest in various contexts or environments where the model operates.

Examples are:

attackers can use data poisoning to manipulate AI systems to behave unrealistically or to generate deep fakes exhibiting specific characteristics;

attackers can use data poisoning to impact an email provider’s spam system with misleading training data with the intention of allowing spam to bypass spam filters and impact larger numbers of people (as of 2022, Google’s spam filters have been compromised at least four times); and

Microsoft’s Tay was an AI chatbot released via Twitter (now X) in 2016, intended to be friendly and interactive. Less than twenty-four hours after launch, Tay was shut down. Malicious actors had begun teaching Tay inflammatory content which resulted in Tay mimicking such behaviour by tweeting racist and sexually-charged messages in response to other Twitter users.

Crucially, attacks may be made on open-source datasets (OSD), rather than on the LLM or AI model. OSDs are collections of data made freely available for public use and are commonly used in machine learning to train and evaluate models across various domains such as image recognition and classification (e.g. MNIST ) and natural language processing and sentiment analysis ( IMDB). Once the dataset has been poisoned, and once the model has scraped or imported the poisoned dataset, the model is vulnerable to compromise.

Data poisoning is becoming more prolific as malevolent actors can gain access to greater computing power and new tools. Forcepoint notes that while the first data poisoning attack took place over 15 years ago , data poisoning has become a critical vulnerability in machine learning and AI.

Data poisoning techniques are now being used by creators to fight back against what they regard as unauthorised image scraping of their works. In the field of text-to-image generators are trained on many, many images (sometimes into the billions). Some generators have been trained by scraping images which are the subject of copyright: creators argue this is infringement while AI developers argue it is permitted as ‘fair use’.

Nightshade is a tool which alters the pixels of an image in a manner which is unnoticeable to human eyes but disastrous to a computer (Poisoned Image). If an AI developer or a provider of databases of images scrapes a Poisoned Image as part of AI training, the data pool as a whole can be poisoned, which can impact an algorithm’s ability to classify an image leading to unpredictable and unintended results.

However, the US Government’s National Institute of Standards and Technology (NIST) has cautioned of the potential impact of ‘data poisoning’ entering the mainstream:

Recently published open source data poisoning tools increase the risk of large scale attacks on image training data. Although created with noble intentions, to allow artists to protect the copyright of their work, these tools become harmful if they fall in the hands of people with malicious intent.

How to prevent?

While data poisoning is difficult to remedy, a multifaceted approach is required to defend against it, including:

the use of robust data validation and sanitisation techniques to remove suspicious data points before training;

monitoring and auditing AI models;

the use of multiple and diverse data sources; and

maintaining records of data sources, modifications, and access patterns to aid analysis in the event of suspected poisoning.

But this is only the start

Cybersecurity risks were, of course, a major concern before AI came along, but as NIST says, the data-driven approach of AI introduces additional security and privacy challenges besides the classical security and privacy threats faced by most operational systems.

NIST also identifies some unresolved challenges for cybersecurity of AI:

Where did this data come from? There is no single organization, even nation, that contains the full data used for training a given LLM. Data repositories also are not monolithic data containers but a list of labels and data links to other servers that actually contain the corresponding data samples. NIST says complex, fragmented picture of data provenance “renders the classic definition of the corporate cybersecurity perimeter obsolete and creates new hard-to-mitigate risks.”

Am I under attack? We already know how cyberattacks can ‘piggy back’ legitimate interactions to penetrate systems. But AI has a particular vulnerability because the reason that AI models can comprehend complex instructions is by blindly adhering to every part of the instruction, including any malicious prompt injections lurking within. Research has shown there are limits to the ability of an AI model (or another ‘guardian’ AI) to screen or ‘censor’ prompts: knowledgeable attackers can readily reconstruct impermissible outputs from a collection of permissible ones.

Bottom line: your existing cybersecurity policies and procedures won’t cut it when deploying AI in your organisation, and there is no handy, off the shelf template to get you through. As NIST puts it rather bleakly “[t]his implies that presently designing mitigations is an inherently ad hoc and fallible process.”

Read more: The 15 Biggest Risks Of Artificial Intelligence

Peter Waters

Consultant