In mid-March 2024, Stability AI filed its defence in the UK proceedings brought by Getty Images alleging that training the Stable Diffusion AI model using images scrapped from Getty databases breaches Getty’s intellectual property rights (copyright and trade mark). This week we summarise the arguments made by each side in their initial pleadings.

How are diffusion models trained?

There appears to be high level agreement between Getty Images and Stable AI about how diffusion models are trained, but they differ of course over the legal implications of that technology.

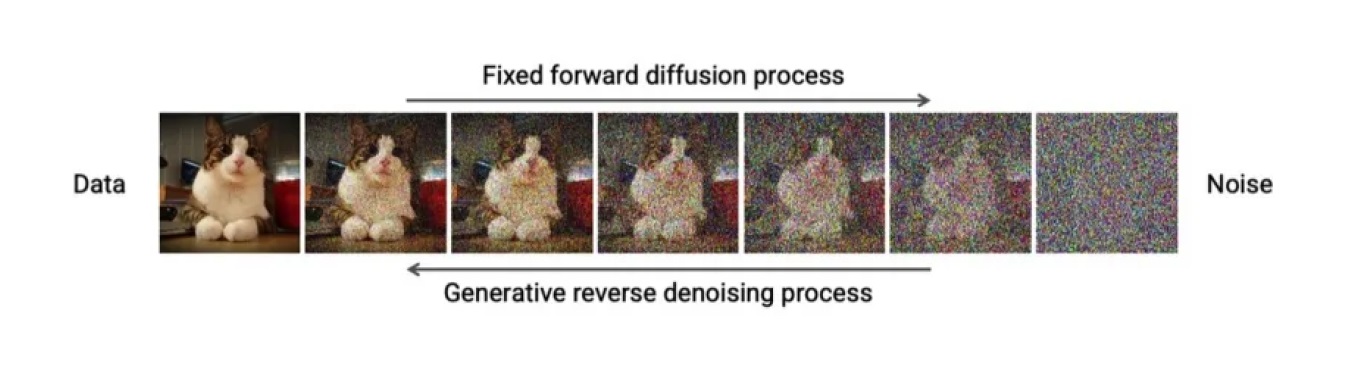

Diffusion models learn by essentially ‘blowing up’ images. As depicted below , images in the training dataset undergo a process of diffusion in which ‘noise’ is incrementally introduced to disperse the pixels (or other even finer points) into large arrays of random static that no longer resemble the images. After diffusion occurs, the model essentially teaches itself to undo the diffusion and restore the images to their original state.

By analysing enough (maybe millions of) individual images of a cat in this way, the diffusion model is able to identify, expressed mathematically, what a cat looks like. Once the pre-trained diffusion model is made available, it will in response to a user prompt requesting an image of a cat wearing a hat eating ice cream to call on what it has learnt about cats, hats and ice cream to generate the requested synthetic image.

Getty's case

In its Amended Statement of Particulars, Getty Images says that between 2017 and 2020 the group invested nearly US$ 1 billion in building and maintaining its databases of images (gettyimages and istock), which it licences to “creative, corporate and media customers in more than 200 countries around the world, with that content helping its customers to produce work which appears on a daily basis in the world’s most influential newspapers, magazines, advertising campaigns, films, television programs, books and websites.” This included over US$150 million in fees paid by Getty Images to acquire rights from third party content producers.

Getty Images describes its curation process, involving a large number of employees who verify each individual image for compliance with legal, moral and content standards, apply a caption and metadata to each image, catalogue it, and then conduct quality checks before posting the image to its websites. Each image accessible on Getty websites displays a watermark that contains a Getty trade mark, and Getty says the watermark reflects the reputation of high quality images Getty has built up through its investment in this curation process.

Images on Getty websites can be viewed for free but use of the image requires a licence. There are annually about 2.7 billion searches globally of Getty websites.Getty Images says that its heavy investment in its image databases is the very reason they are so attractive to AI developers, and while some AI developers are prepared to pay for access others have taken copyrighted images for free:

The photographs that appear on the Getty Images Websites are highly desirable for use in connection with artificial intelligence and machine learning because of their high quality, and because they are accompanied by content specific, detailed captions and rich metadata. With appropriate safeguards for the rights and interests of its photographers and contributors and the subjects of the images in its collection, Getty Images also licenses the use of some of its images and associated metadata in connection with the development of artificial intelligence and machine learning tools. Getty Images has licensed millions of digital assets to leading technology innovators for a variety of purposes related to artificial intelligence and machine learning.

Stable Diffusion was trained using a vast collection of images (numbering 3-5 billion) from a data warehouse, LAION, which is a German-based association established to provide datasets for use in training AI models. The LAION datasets do not contain the actual images, but instead comprise web links to photographs and videos, together with associated captions, scrapped from the web sites (the ‘Scrapped Links’), including from Pinterest, WordPress-hosted blogs, SmugMug, Blogspot, Flickr, Wikimedia, Tumblr and the Getty websites. Getty Images says that it so far has identified Scrapped Links to 12 million Getty copyrighted used in training Stable Diffusion obtained without its consent.

Getty Images argues that the diffusion process described above inherently involves a chain of sequential copying of Getty Images from assembling of the training dataset through the diffusion process and onto the outputs in response to individual user prompts:

To create the training dataset, Stable Diffusion used the Scraped Links to visit the location of, and copy, Getty images. That content (“the Copied Content”) was downloaded and stored by Stability AI in order to proceed with the subsequent steps set out below.

Stability AI then encodes the Copied Content, which involves pixelating and distorting the Copied Content to take up less memory, and separately encodes the paired text (e.g. captions), thereby creating and storing further copies of the Copied Content (“the Encoded Content”).

In the way described above, Stability AI adds “noise” to the Encoded Content in a series of steps (“the Noisy Copies”). This step involves the creation and storing of further copies of the Copied Content and the Encoded Content.

Again, in the way described above, Stability AI decodes each of the Noisy Copies back to a useable form (“the Decoded Noisy Copies”) which trains the model to deliver images matching the subject matter of the Copied Content, conditioned on encoded text. This step involves the creation and storing of further copies of the Copied Content, the Encoded Content, and the Noisy Copies.

Stability AI decodes the Decoded Noisy Copies again in order to scale each image up to a higher resolution (“the Decoded Copies”), thereby creating and storing further copies of the Copied Content, the Encoded Content, the Noisy Copies and the Decoded Noisy Copies.

Stability AI compares the Copied Content and the Decoded Copies and the difference in pixels used to update the neural network weights. This process is repeated thousands of times for each image with different levels of noise at each iteration, to teach the model weights how to recover an image from noise conditioned with semantic text caption information.

The trained model is then made available to users to produce synthetic images in response to text and/or image prompts, drawing on the above programming.

Through this chain, Getty alleges the synthetic image output in response to a user prompt comprises a substantial part of one or more of the Getty copyrighted works, illustrated by their close similarity with Getty images on its websites, even in some cases with the output bearing the sign GETTY IMAGES and/or ISTOCK as a watermark. Getty Images argues that this also involves breach of trade mark and passing off.

While Getty Images acknowledges that the synthetic output can have differences to the images used in training (the hat and the ice cream imposed on the cat), this itself causes harm to the content and trade mark owners: “the synthetic images generated by Stable Diffusion distort and/or manipulate the underlying image from which it was copied, which is prejudicial to the reputation of the author of the original work.”

Stable Diffusion

In its defence, Stability AI essentially argues that Getty Images misses the whole point of generative AI, which is “to generate new and original synthetic images which do not correspond to any particular previous example processed by the model during training” - i.e. there are not that many ‘real world’ photographs of cats wearing silly hats eating ice cream!

Stability AI acknowledges that Getty Images were used in training its diffusion model and that this involved “temporary copying” to create the training dataset.

However, Stability AI mounts two key arguments:

that copying did not occur in the UK. While Stability AI’s founder is located in the UK, it argues that it used AWS cloud computing resources to store the training database, with the relevant AWS servers are located in the US, and that the training and model development was undertaken by Stability AI employees based outside the UK. Stability may be hoping to take advantage of a perceived broader ‘fair dealing’ exemption under US copyright law. The UK High Court denied Stability AI’s earlier application to grant it summary judgment on this jurisdiction basis (thus dismissing the case). While the judge observed that ‘if this were the trial of this action, the evidence to which I have referred above would (on its face) provide strong support for a finding that, on the balance of probabilities, no development or training of Stable Diffusion has taken place in the United Kingdom’, that evidence was not sufficient to meet the higher burden of proof on Stability AI for summary judgment to be given.

by, in effect, trying to break the chain Getty asserts between an individual input to training and the synthetic output in response to user prompts. Stability AI argues that “a clear distinction [needs to be made] between the process of training a latent diffusion model and subsequent use of the pre-trained model weights by a user”.

As to the training and development phase, Stability AI says that “it forms no part of the training process for Stable Diffusion to “memorise” or otherwise reproduce individual images from the training dataset.” Instead, in its view the diffusion process works as follows:

the training data (including those copied Getty Images) is used to adjust the weights in the model “through a process known as back-propagation by reducing the predicted error rate of the model across the training dataset against an expected output for each element in the training dataset”.

the encoded model weights resulting from the training process do not reproduce all or a substantial part of any indiviudual image from the training data set: “[i]nstead, they comprise an original database of optimised parameter values in the form of data structures representing numbers and weights”.

as a result, as a result, Stable Diffusion or any individual element of the model contains no copy of any of the training content.

Coming to use of the developed model by an end user, Stability AI argues that the process is as follows:

the synthetic image outputs begin as random noise images, which necessarily do not comprise any part of the original copyrighted works included in the training database.

the random noise images are processed in response to the text prompt from the user and the information from the weighs database working together to produce the synthetic images.

therefore, if there is any resemblance between a synthetic output and a copyrighted work that technically cannot be the result of (i) the inclusion of the copyrighted work in the training database or (ii) any element of the intellectual creation of the author of the copyrighted work.

Stability AI says that this is illustrated by the fact that, because the output images for each user prompt are diffused from random noise (which are necessarily different each time), Stable Diffusion will reproduce variable images from the same or similar text prompts. This means that no particular image can be generated from any particular prompt.

In response to Getty Images evidence of outputs from prompts which were identical or very similar to images on the Getty websites (including bearing Getty watermarks and trademarks), Stability AI argues that:

Each of those images arose from eccentric and contrived use of the user If and insofar as a user is able to generate an output image which contains elements that correspond to elements of a training image, the resulting output merely reflects the user’s prompt being designed to generate images containing those elements. Such output is not derived from the training image itself, but rather from the prompt designed by the user.

Finally, section 30A of the UK Copyright, Designs and Patents Act provides that fair dealing with a work for the purposes of caricature, parody or pastiche does not infringe copyright in the work. Stability AI argues section 30A applies to the synthetic output generated in response to a user prompt because:

Such an image is a pastiche in that it is an artistic work in a style that may imitate that of another work, artist, or period, or consisting of a medley of material imitating a multitude of elements from a large number of varied sources of training material.

There are also pending cases between AI developers and content owners in the US. The link below is to the New York Times’s originating pleading in its US case against OpenAI which includes examples of text outputs side-by-side with the original New York Times articles. In response OpenAI has asked a federal judge, reflecting the Stability AI argument in the UK case about ‘eccentric and contrived use’ (although put in more colourful US-litigation language), to dismiss parts of the New York Times' lawsuiton the basis that the newspaper "hacked" its chatbot ChatGPT and other artificial-intelligence systems to generate misleading evidence for the case.

Read more: United States District Court

Peter Waters

Consultant