While open source software has been part of the ecosystems of previous digital technologies, open source foundation models may play a far more instrumental role in the AI ecosystem, with hoped-for benefits spanning innovation, competition, the distribution of decision-making power, and transparency.

What is open source AI?

A recent paper from Stanford’s Institute for Human-Centered Artificial Intelligence (HAI) defines open source AI as a foundation model whose weights are widely available, even if no other development assets (e.g. code or data) are openly released. Weights represent the distilled mathematical relationships that an AI model has learned from its training materials.

Open source AI has some big backers. In December 2023, Meta and IBM launched a new group called the AI Alliance, advocating an “open-science” approach to AI development. President Biden’s Executive Order on AI directs the US Commerce Department to report by mid-2024 on the potential benefits and risks of foundation models with widely available weights, with recommendations on how to manage them. The EU’s new AI Act seeks to incentivise open source AI by imposing a (somewhat) lighter regulatory regime than applies to closed source general-purpose AI models.

How does open source AI perform compared to closed source?

While it is widely believed that open source LLMs such as Meta’s Llama-2 lag behind closed source models such as OpenAI’s ChatGPT and Anthropic’s Claude, a December 2023 ‘survey of surveys’ found a more mixed and rapidly changing picture of comparative performance:

The overall performance of closed source AI is still superior to that of open source AI, but the gap is closing. For example, on general conversational tasks, Llama-2-chat-70B achieves a 92.66% win rate in benchmark tests, surpassing the closed source GPT-3.5-turbo by 10.95 percentage points, although GPT-4 remains the top performer among all LLMs, open and closed source, with a win rate of 95.28%.

But open source AI outperforms closed source AI on some individual capabilities. Lemur-70B-chat outperforms GPT-3.5-turbo at exploring the environment and at following natural language feedback on coding tasks. Gorilla, fine-tuned from Llama-7B, outperforms GPT-4 on some tool-use tasks, such as generating API calls.

Where open source AI really shines is in application- or domain-specific models. MentaLlama-chat-13B outperforms GPT-3.5-turbo on mental health analysis datasets, while Radiology-Llama2 can improve performance on radiology reports.

However, on one of the most important dimensions for AI, safer and more ethical behaviour, GPT-3.5-turbo and GPT-4 remain unbeaten, principally because of large-scale reinforcement learning from human feedback (RLHF) during training.

What are the benefits of open source AI over closed source AI?

HAI identified two key benefits.

First, open source AI models better support innovation, for the following reasons:

Releasing weights (and associated computational artifacts, such as activations and gradients) permits a wide range of adaptation methods, such as fine-tuning, for building downstream applications. HAI observes that “[w]hile some closed foundation model developers permit certain adaptation methods (e.g. OpenAI allows fine tuning of GPT 3.5 as of January 2024), these methods tend to be more restrictive, costly, and ultimately constrained by the model developer’s implementation.” AI is also increasingly important to scientific research, but because the updates and changes between successive versions of a closed source model are a ‘black box’, researchers can find it difficult to replicate and test experiments with confidence, for example in drug research.

Developers can also deploy open source AI directly on their own local hardware, which removes the need to transfer data to the original model developer. HAI observes that “[t]his allows for the direct use of the models without the need to share sensitive data with third parties, which is particularly important in sectors where confidentiality and data protection are necessary”, such as healthcare.

Second, open source AI models potentially create more technological and competitive diversity in AI markets. HAI says:

By design, closed models contribute to the rise of algorithmic monoculture. Broad access to model weights and greater customizability further enable greater competition in downstream markets, helping to reduce market concentration at the foundation model level from vertical cascading.

Open source AI models lower the cost of entry for developers. OpenAI is reported to have spent US$100 million training GPT-4. By contrast, training the Llama 2 series of open source models required 3.3 million GPU hours on NVIDIA A100-80GB GPUs which, at February 2024 cloud computing rates of about US$1.80 per GPU hour, comes to around US$6 million.
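The Llama 2 estimate is simple arithmetic: total GPU hours multiplied by an hourly rental rate. A minimal Python sketch using the figures quoted above; the constant and function names are illustrative, and the rate is an approximate cloud price, not what Meta actually paid.

```python
# Back-of-envelope training cost: GPU hours x hourly rental rate.
# Figures are those quoted in the text; the rate is an approximate
# February 2024 cloud price, not Meta's actual cost.

LLAMA2_GPU_HOURS = 3.3e6      # total A100-80GB GPU hours for the Llama 2 series
RATE_USD_PER_GPU_HOUR = 1.8   # approximate cloud rental rate (US$)


def training_cost_usd(gpu_hours: float, rate_per_hour: float) -> float:
    """Return the estimated compute cost in US dollars."""
    return gpu_hours * rate_per_hour


cost = training_cost_usd(LLAMA2_GPU_HOURS, RATE_USD_PER_GPU_HOUR)
print(f"Estimated Llama 2 compute cost: US${cost / 1e6:.2f} million")
# prints: Estimated Llama 2 compute cost: US$5.94 million
```

The result, roughly US$5.94 million, is where the “around US$6 million” figure comes from. It covers rented compute only, so it is not a like-for-like comparison with a reported total training budget.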

The argument between open and closed source AI models often takes on a ‘philosophical’ bent. Meta’s chief AI scientist, Yann LeCun, has said:

In a future where AI systems are poised to constitute the repository of all human knowledge and culture, we need the platforms to be open source and freely available so that everyone can contribute to them. Openness is the only way to make AI platforms reflect the entirety of human knowledge and culture.

The big disadvantage of open source models, and one reason closed source models may stay a nose ahead, is that their developers lack the user feedback and interaction logs that closed model developers can draw on to improve their models over time.

The risks of open source AI

Open source AI gets a ‘bad rap’ as being riskier than closed source AI models, because direct access to the weights gives malevolent actors as much scope to ‘innovate’ as bona fide developers, and the original developer has no ability to monitor, moderate, or revoke access to mitigate these risks.

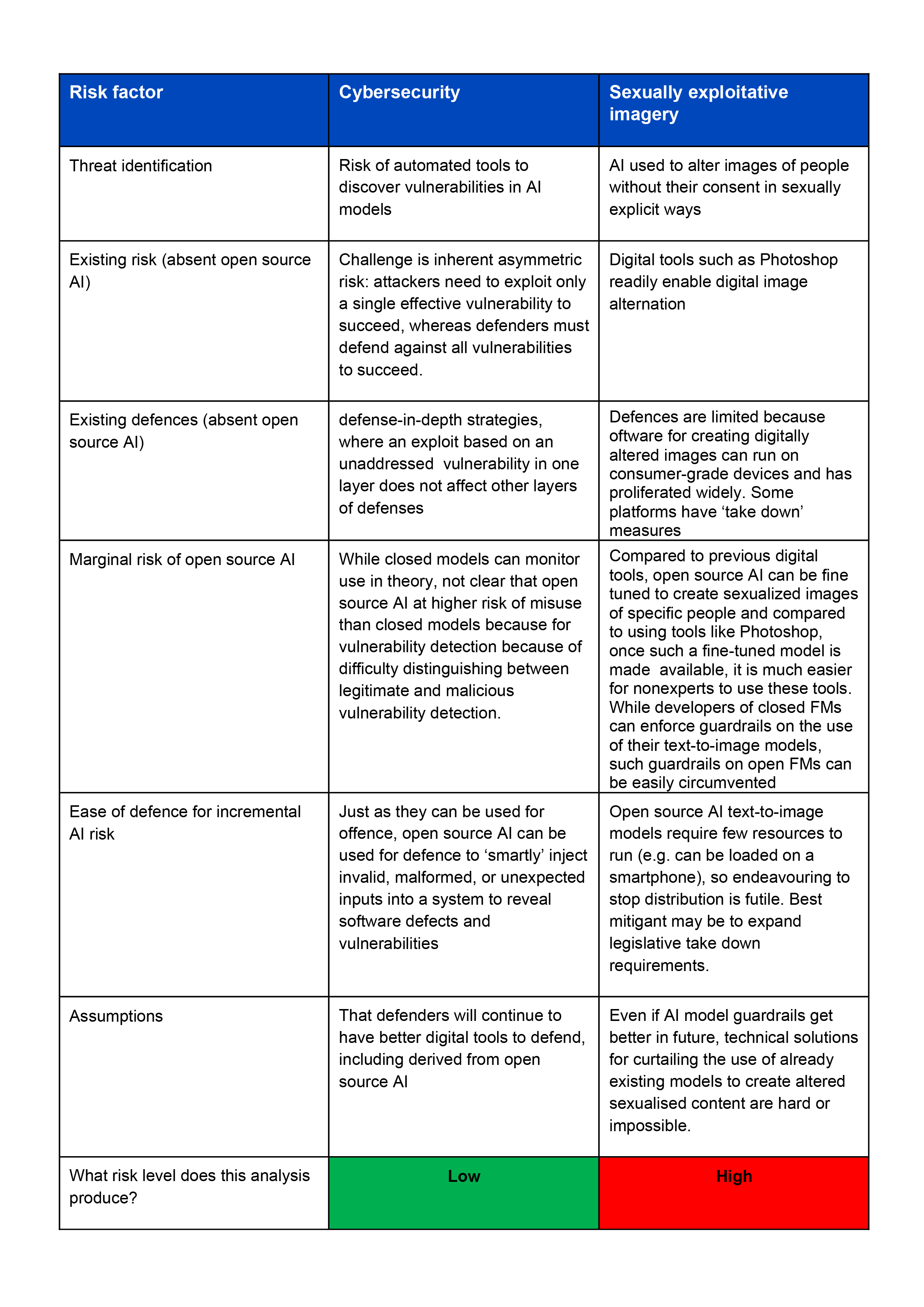

However, HAI argues that a more nuanced view is needed of the marginal risk that open source AI presents compared with closed source AI and other digital technologies. HAI proposes a six-step assessment framework, as follows:

Systematically identify and characterize the potential threats being analyzed, in a ‘neutral’ fashion as between open source AI and closed source AI.

Given these threats, establish the extent and level of the existing risk (in a world without open source AI).

Assuming misuse risks already exist, identify the existing defenses against them and how successful those defenses are (again, in a world without open source AI).

Combining this analysis of existing risks and defenses, identify the marginal risk that a threat presents with an open AI model.

Having identified the risk ‘delta’ between existing defenses and the marginal risk posed by open source AI, ask what new defenses could be implemented, or how existing defenses could be modified, to meet that increased risk. The tricky part of this step is anticipating how defenses will evolve in response to the risk: for example, open source AI may itself contribute to new defenses (e.g. the creation of better disinformation detectors).

Lastly, know your unknowns. As HAI says:

it is imperative to articulate the uncertainties and assumptions that underpin the risk assessment framework for any given misuse risk. This may encompass assumptions related to the trajectory of technological development, the agility of threat actors in adapting to new technologies, and the potential effectiveness of novel defense strategies.
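Because the framework is a fixed sequence of steps, it can help to record each step explicitly so that gaps are visible (for example, estimating marginal risk without first documenting existing defenses). The following is a minimal Python sketch of such a checklist; the class, field and method names are hypothetical and are not taken from HAI’s paper, and each field simply holds free-text findings for one step.

```python
from dataclasses import dataclass, field, fields


@dataclass
class MarginalRiskAssessment:
    """Hypothetical checklist mirroring the six-step framework described above.

    Each field holds the findings for one step; an empty value means the
    step has not been done yet. Names are illustrative, not HAI's.
    """

    threat: str = ""             # 1. threat, characterized neutrally (open vs closed)
    existing_risk: str = ""      # 2. risk level in a world without open source AI
    existing_defenses: str = ""  # 3. current mitigations and how well they work
    marginal_risk: str = ""      # 4. additional risk attributable to open models
    new_defenses: str = ""       # 5. new or modified defenses for that risk 'delta'
    uncertainties: list[str] = field(default_factory=list)  # 6. assumptions, unknowns

    def missing_steps(self) -> list[str]:
        """Return the names of the steps that have not been filled in yet."""
        return [f.name for f in fields(self) if not getattr(self, f.name)]


assessment = MarginalRiskAssessment(
    threat="Sexually explicit images of a real person",
)
print(assessment.missing_steps())
# prints: ['existing_risk', 'existing_defenses', 'marginal_risk', 'new_defenses', 'uncertainties']
```

The structure deliberately does no analysis of its own; it only records, in order, what each step has found and flags the steps that are still empty.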

HAI demonstrated how its six-step risk analysis would apply to two possible threats: cybersecurity intrusions into an application built using open source AI, and the use of open source AI tools to create sexually explicit images of a real person (e.g. revenge porn).

Recommendations

HAI makes the following recommendations to promote open source AI.

First, there needs to be a clearer allocation of responsibility between upstream and downstream developers for ensuring that open source AI models are safe and responsible. Upstream developers should be transparent about both the responsible AI practices they have implemented and those they recommend to, or delegate to, downstream developers. Those downstream developers, in turn, need to ask the right questions and take on board their own responsibilities.

Second, policymakers need to proactively assess the impact of proposed regulation on open source AI, given that its characteristics and distribution channels in the market differ from those of closed source AI. HAI comments that “[p]olicies that place obligations on foundation model developers to be responsible for downstream use are intrinsically challenging, if not impossible, for open developers to meet.”

Third, while open source AI has theoretical benefits in catalyzing innovation, distributing power, and fostering competition, competition regulators need to invest in measuring whether and how much these benefits actually play out in AI markets.

Read more: On the Societal Impact of Open Foundation Models

Peter Waters

Consultant