In an article earlier this year , we reviewed an Oxford University study that found that ‘[r]ecent advances in artificial intelligence (AI) have caused an explosion of interest in “creative AI”’ across a range of creative mediums: from fine art, poetry and play writing. Data has been transformed into pigment and algorithms have been transformed into paint brushes. An AI work recently won the Colorado State Fair’s annual art competition.

Creative AI has now taken its biggest leap forward with Stability.ai’s release on 22 August 2022 of Stable Diffusion, a publicly accessible image generator service which can generate photorealistic art which mimics human creativity. Try it - you won’t be able to stop!

What you need to know

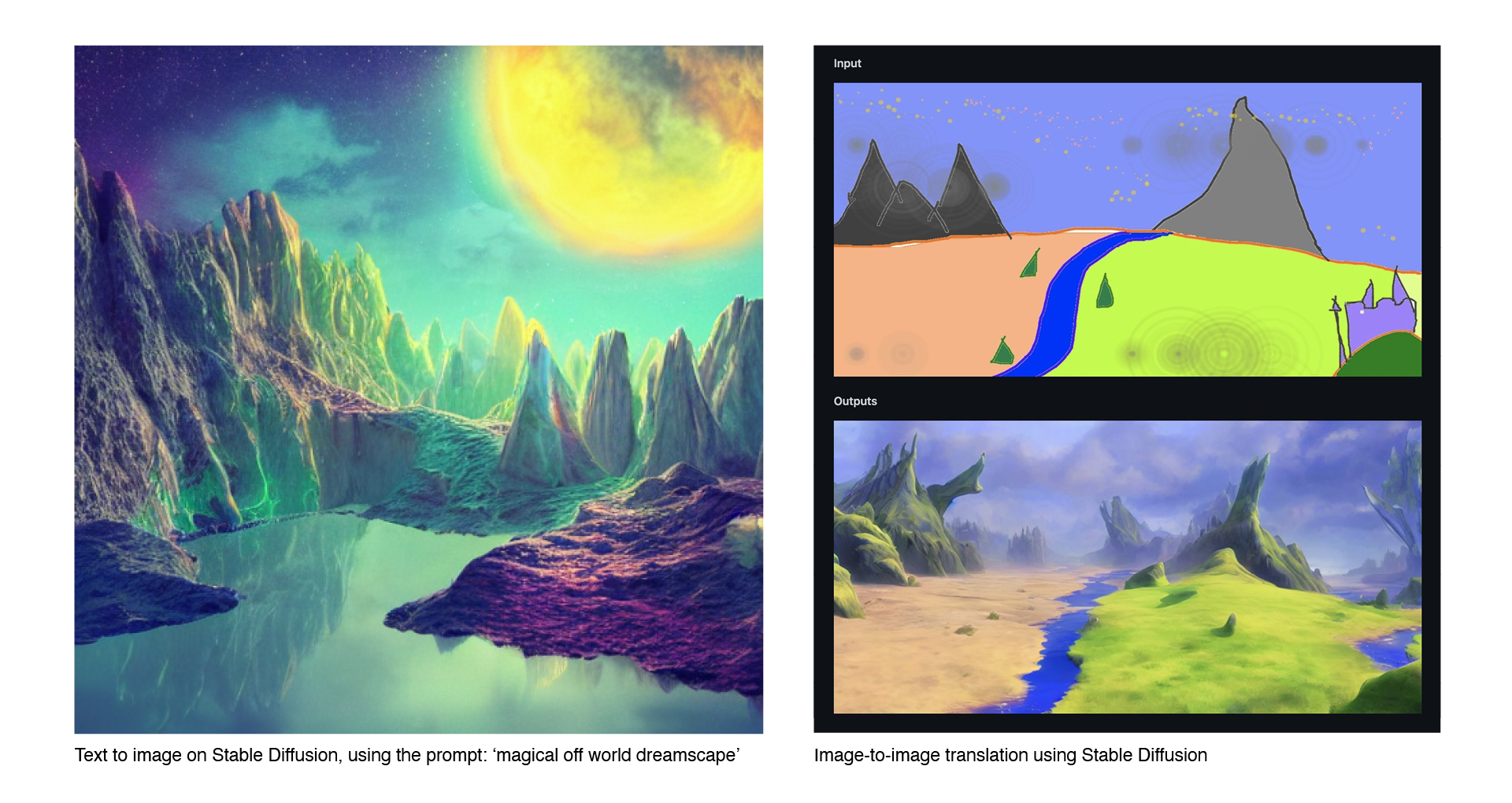

Similar to previous models DALL-E 2 , and Midjourney , Stable Diffusion creates photorealistic images by using text descriptions. Users enter a prompt, and the algorithm generates an image from that specification. However, Stable Diffusion differs from existing photo generators in several ways.

Unlike existing platforms, Stable Diffusion is available as an open-source - that is, the code underlying the AI and the model it is trained on, are also publicly available. This is a drastic shift from other tech companies such as OpenAI or Google, which have previously limited the use of their AI models. Stable Diffusion says its mission is to democratise AI capabilities:

"We look forward to the open ecosystem that will emerge around this and further models to truly explore the boundaries of latent space"

Stability.ai’s CEO and founder, Emad Mostaque opines that the profitability of AI models will come from commercialising the training of private AI models and scale infrastructure. Further details regarding Stability.ai’s business model are yet to be provided.

Stable Diffusion also claims to deliver a ‘breakthrough in speed and quality’ and is marketed as being able to run on consumer graphic processing unit (GPU) which are under 10 GB of Video Random Access Memory (VRAM). Stability.ai also announced that they ‘will also release optimisations to allow [Stable Diffusion] to work on AMD, Msacbook M1/M2 and other chipsets’.

What can

Stable Diffusion do?

The current capabilities of Stable Diffusion allow users to:

Convert text into novel images at 512x512 pixels in seconds.

Use image modification, via image-to-image translation and upscaling, to transform an existing image into a new image.

Use GFP-GAN modelling which allows users to upload a blurred face which is then upscaled or restored.

Convert text into novel images at 512x512 pixels in seconds.

Use image modification, via image-to-image translation and upscaling, to transform an existing image into a new image.

Use GFP-GAN modelling which allows users to upload a blurred face which is then upscaled or restored.

Contemporary AI models use deep learning neural networks which employ large self-learning algorithms to teach the AI to discern patterns or symbols in data. Self-learning models — such as GPT-3 — represent a milestone in this technique. A system like GPT-3 for example, leverages deep learning on a neural network of approximately 45 terabytes of text data, to produce humanlike text with an air of verisimilitude.

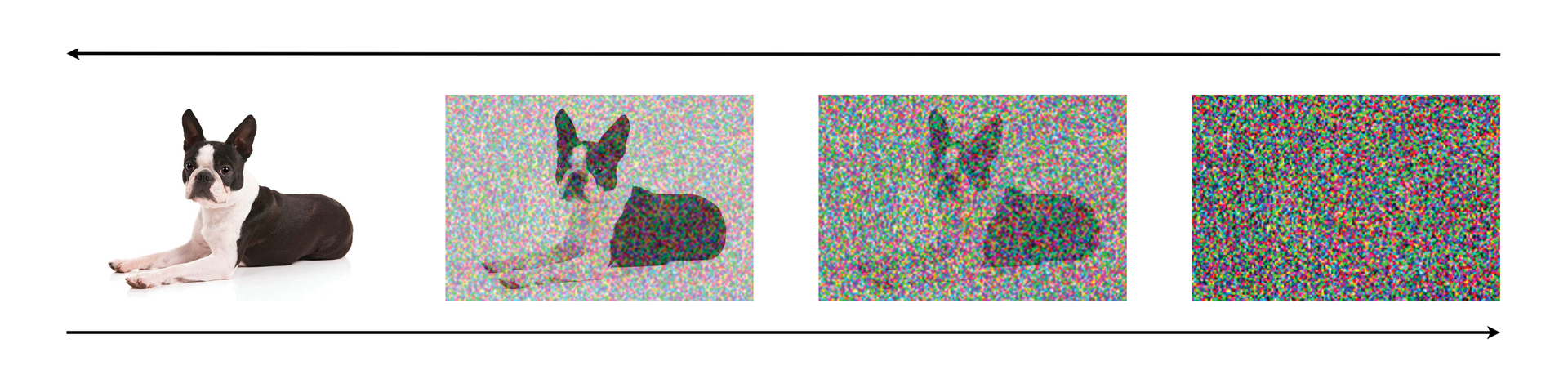

Stable Diffusion forms part of this lineage of deep-learning systems. Specifically, Stable Diffusion learns the connection between image and text through a latent Diffusion Model process. Diffusion models work by taking image data and adding ‘noise’ to it. This noise comprises small dots on the image which destroy the image’s quality. The ‘noise’ slowly wipes out all discernible details in the image until it becomes pure ‘noise’. The model will then learn to reverse the noise, by gradually de-pixelating the image and recovering the image. As Google explains:

"Running this reversed corruption process synthesizes data from pure noise by gradually denoising it until a clean sample is produced."

After training, the model is then able to generate data by processing randomly sampled noise through the learned de-noising process.

The database underlying Stable Diffusion is called LAION-Aesthetics . This database contains images which have been embedded with image-text pairs and have been filtered according to their ‘beauty’. Specifically, the database was fine-tuned by AI models trained to predict the rating people would give images when they were asked “How much do you like this image on a scale from 1 to 10?”. This aims to eliminate pornographic and otherwise disturbing content from forming the basis of the AI’s training.

Notwithstanding this, the end product is not perfect as Stability.ai acknowledges that Stable Diffusion may still ‘reproduce some societal biases and produce unsafe content... ’.

How Stable Diffusion is being regulated

The ethical, moral and legal issues associated with the misuse of AI devices have been well canvassed. These concerns are particularly heightened as Stable Diffusion permits a wider range of images to be generated. For example, Stable Diffusion users may generate images of real people; a feature which is prohibited in comparable AI models, such as DALL-E 2 .

In response to these concerns, Stability.ai emphasised the importance of “ethical and legal” use of the model in its public release announcement . Stability.ai requires distribution of Stable Diffusion and its derivatives to be governed by its Creative ML OpenRAI-M license . The company describes this as ‘a permissive license that allows for commercial and non-commercial usage ’. Relevantly, it provides that:

Users are granted a ‘perpetual, worldwide, non-exclusive, no-charge, royalty-free, irrevocable ’ (subject to certain exceptions) patent license to make, use, sell or offer to sell, import or otherwise transfer, the Model and any of its Complementary Material.

Users cannot use, and agree not to use, the Model or its Derivatives in a way which would cause harm to minors, defame anyone, discriminate against an individual or group or exploit the vulnerabilities of a specific group.

The Licensor does not assert any rights in the output users generate using the model.

Users are granted a ‘perpetual, worldwide, non-exclusive, no-charge, royalty-free, irrevocable ’ (subject to certain exceptions) patent license to make, use, sell or offer to sell, import or otherwise transfer, the Model and any of its Complementary Material.

Users cannot use, and agree not to use, the Model or its Derivatives in a way which would cause harm to minors, defame anyone, discriminate against an individual or group or exploit the vulnerabilities of a specific group.

This license operates as a form of social agreement between Stable Diffusion and its users, as it relies on users self-regulating their own conduct and ‘doing the right thing’. However, the license itself does not have any teeth; users cannot be penalised if they fail to comply with the license. Stable Diffusion’s software is hard-wired with an adjustable AI tool, Safety Classifier , which is designed to remove undesirable outputs. However, Stable Diffusion acknowledges that ‘[t]he parameters of this tool] can be readily adjusted ’, meaning that users can disable its functionality.

Many countries, including Australia, do not have any specific laws which regulate the use (and misuse) of AI models. The lack of government oversight means that technology like this is ripe for exploitation bad actors.

The implications for the future of AI art models

Emad Mostaque states that ‘[w]e plan to use our compute to accelerate open source, foundational AI ’. Mostaque envisages future developments in AI art models as encompassing ‘local GPU usage, animation, logic-based pipeline flow with more such as inpainting, dynamic variation, and latent space exploration to come. ’ The accessibility of a sophisticated AI model and source code will undoubtedly propel further milestones in AI capabilities. As stated by Stability.ai:

"This technology has tremendous potential to transform the way we communicate and we look forward to building a happier, more communicative and creative future with you all."

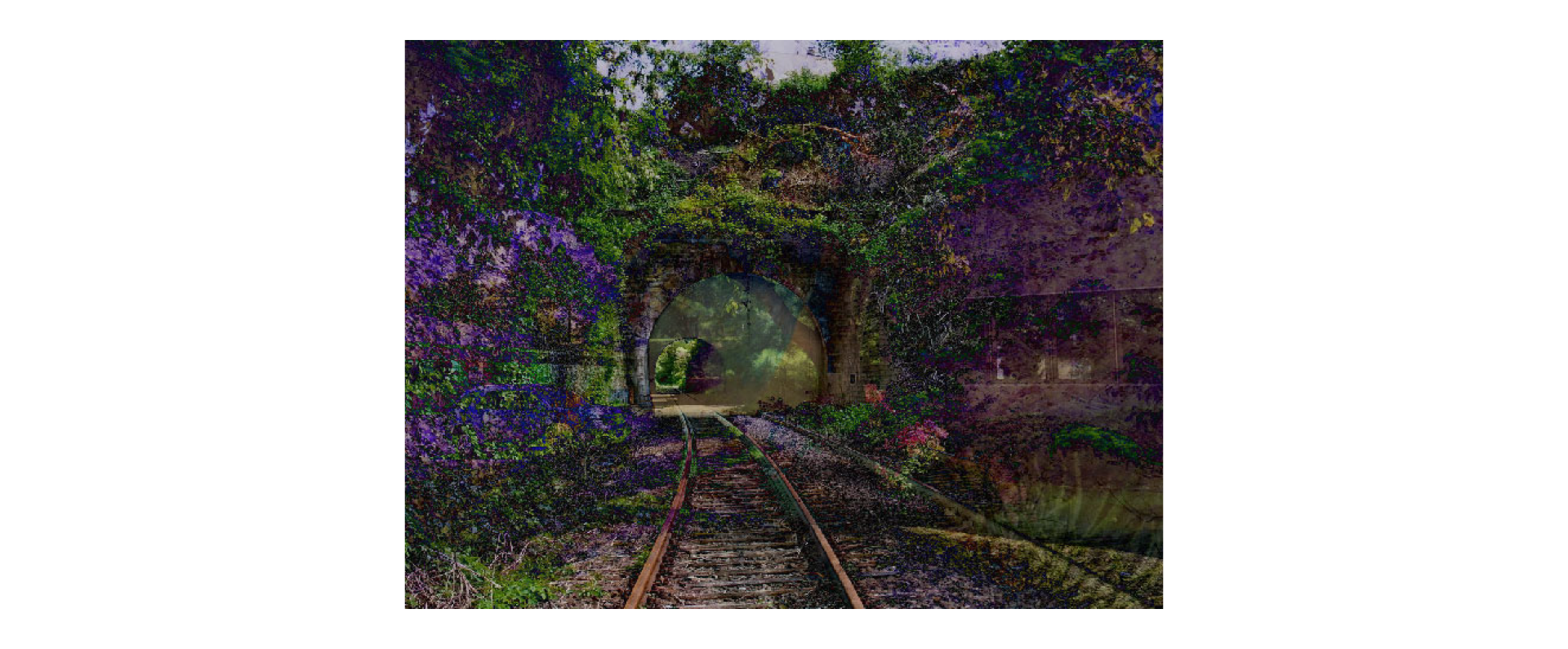

Beyond image generation, sophisticated AI-generated video models are also on the horizon. DeepFake videos of celebrities are becoming an entrenched feature of online pop culture. The earlier DeepFake video technology relied on having a large library of existing images to seed the algorithm. However, AI video generation technology is rapidly accelerating beyond this limitation. For example earlier this year Microsoft’s Asia research team introduced NUWA-Infinity , a generative model which, amongst other things, can create videos from text inputs or sketches. is currently unavailable to the public, however, similar devices such as CogVideo have just been publicly released as open source. With time, these models will only become increasingly more sophisticated and we are likely to witness further ground-breaking developments in AI technology.

But is it art?

The US Copyright Office says absolutely not. In February 2022, the Office’s Review Board rejected an application a two-dimensional artwork claim in the work titled 'A Recent Entrance to Paradise' (pictured below) which was generated by an AI.

The Review Board accepted that ‘as a threshold matter...the Work was autonomously created by artificial intelligence without any creative contribution from a human actor ’. The Review Board noted that the US Copyright Act affords protection to ‘original works of authorship ’ that are fixed in a tangible medium of expression and that the phrase ‘“original work of authorship” was purposely left undefined by Congress ’. However, the Board considered that while the term is ‘very broad ’, its scope is not unlimited. Reviewing the legal authorities, the Review Board concluded that ‘human authorship is a prerequisite to copyright protection ’, and there needed to be a ‘nexus between the human mind and creative expression ’. The recent Full Federal Court decision in Commissioner of Patents v Thaler , reflects the US approach. In that case, the Full Court unanimously held that an AI computing system, being a non-human, could not be named as an ‘inventor ’ on an Australian patent, as such systems do not contribute to the ‘inventive concept ’ underlying the patent.

Yet AI art has appeal - in 2018, Christie’s sold its first piece of auctioned AI art—a blurred face titled ‘Portrait of Edmond Belamy ’ — for $432,500. Rutgers' Art & AI Lab has been showing in galleries AI generated art works which have proven highly popular:

"We found that people couldn’t tell the difference: Seventy-five percent of the time, they thought the AICAN-generated images had been produced by a human artist. They didn’t simply have a tough time distinguishing between the two. They genuinely enjoyed the computer- generated art, using words such as “having visual structure,” “inspiring” and “communicative” when describing AICAN’s work."

The programmer behind the AI which won the Colorado art prize has said ‘Art is dead, dude. It’s over. A.I. won. Humans lost ’.

But in probably the best statement of why AI generated images are not art, the Rutgers AI lab concluded:

"Still, there’s something missing in [AI’s] artistic process: The algorithm might create appealing images, but it lives in an isolated creative space that lacks social context. Human artists, on the other hand, are inspired by people, places, and politics. They create art to tell stories and make sense of the world."

Read more: 'Second Request for Reconsideration for Refusal to Register A Recent Entrance to Paradise'

Peter Waters

Consultant