While AI and robotics are often spoken of in the same breath, integrating AI as the ‘brain’ and robots as the ‘arms and legs’ is challenging. However, we now appear to be on the brink of AI-powered robots which, in the words of Intel:

have the ability to collect, analyse and act on information about their surroundings in near real-time to complete tasks, often autonomously…Using conversational AI, [autonomous mobile robots] or humanoid robots with every interaction...will capture dialogue, process it, respond and learn in anticipation of the next interaction.

What were the challenges to integration and how are they being overcome?

Overcoming challenge 1: speaking different languages

A robot’s instructions to move through its surrounding physical environment are expressed in Cartesian space, defined (as in a graph) by two perpendicular horizontal axes, the x-axis and the y-axis, and the vertical z-axis to make a 3D space. The robot has three primary motions: linear (A to B in a straight line), joint (A to B by a non-linear, potentially unpredictable path) and arc (moving around a fixed point in a constant radius). For example, instructing a robot with x=100, y=0, means moving straight ahead 100 mm.

Large language models (LLMs) don’t perceive or interact with the outside physical world but operate in their own heads (that is, applying the billions of parameters on which they have been trained). They learn, receive questions and provide outputs in terms of semantics, labels and textual prompts.

As a result, LLMs produce outputs in words but the robots need inputs in combinations of the x, y and z axes. LLMs function by predicting what word is next and can answer the question in subtly different ways when asked by different users or at different times. Robots need fixed, grounded and highly precise instructions on how to move through their three-dimensional Cartesian world.

In 2024, Google DeepMind researchers achieved a breakthrough in this 'AI is from Mars and robots are from Venus' problem. They started with powerful vision-language (VLM) AI models (they used PaLI-X and PaLM-E), which learn to take vision and language as input and provide free-form text and are used in downstream applications such as object classification and image captioning. But instead of producing text outputs, the VLMs were trained on robotic trajectories (that is, how a robotic limb would move) “by tokenising the actions into text tokens and creating multimodal sentences that respond to robotic instructions paired with camera observations by producing corresponding actions".

By getting the AI to directly think actions, not words, the AI can produce instructions following robotic policies: that is, move the robotic arm in a linear, joint or arc motion along the x-axis, y-axis or z-axis.

Overcoming challenge 2: the world seen from different robot perspectives

Machine learning relies on massive data sets to learn. LLMs have access to the vast – and sometimes toxic – pool of human-created content on the Internet, expressed and stored in a consistent format.

Robots take many different forms, from floor level vacuum cleaners to single-armed factory robots, quadrupeds, drones and humanoid robots. They are equipped with very different tools, from screwdrivers and claws through to the recently released F-TAC Hand, which integrates high-resolution tactile sensing across an unprecedented 70% of its surface area, allowing for human-like adaptive grasping.

This means the growing army of robots varies widely in their camera views, Cartesian positions, proprioceptive inputs (the robot's sense of its own position and movement in space), action outputs and control frequencies. The narrow lens through which individual robots see the real world means, unlike the Internet for LLMs, there is not a large pool of visual data on which to train robots.

Researchers from UC Berkeley and Carnegie Mellon Universities (Doshi, Walke et al) have proposed solving this problem by using a scalable and flexible LLM transformer-based policy that “can leverage much broader and more diverse datasets, which in turn can lead to better generalisation and robustness” (called CrossFormer).

The researchers used a transformer neural network, which was the breakthrough technology for LLMs, because it enables encoding all available observation types from robots (text, image, sound and so on) and breaking down observation data into sequences of time-steps where each time-step contains image observations, proprioceptive observations and an action. The researchers identified 20 different types of robot action (embodiment) varying widely in observation space, action space and control frequency. Across this group of embodiments, the transformer was trained on images from a single-armed robot and then progressively data was added from other robot types (such as two-armed robots and quadruped robots).

Essentially, ‘flattening’ a wide range of robot actions into a sequence-by-sequence analysis produces a set of network weights (learned by the AI model from this process) which can control vastly different robots, including single and dual arm manipulation systems, wheeled robots, quadcopters and quadrupeds. The cross-embodiment approach not only increases the data pool for training, but allows the AI robot to apply its sequence-by-sequence learnings in situations on which it has not been explicitly trained. For example, without any manual training by humans on action-space alignment (for example, how not to crash into walls), CrossFormer can navigate trajectories both indoors and outdoors, as well as on out-of-distribution paths. The researchers’ hypothesis suggest it’s due to their more flexible training approach allows a larger number of different camera views to be synthesised at each sequence step (more angles, including third party images looking at a robot in action, helps the AI process how to turn sharp corners).

CrossFormer outperformed specialised single-task robots trained on data about that task (73% vs 67% success rate), but even more impressively exceeded the current best cross-embodiment training (73% vs 51% success rate).

Overcoming challenge 3: “sorry, I haven’t been trained on that”

Machine learning algorithms, while powerful, struggle with true generalisation. They excel in controlled, well-defined environments but falter when confronted with scenarios that deviate from their training data. This limitation becomes particularly pronounced in robotics where adaptability and context understanding are paramount. A robot trained in a laboratory setting might completely fail when deployed in a real-world, unpredictable environment.

LLMs draw on pattern recognition to predict the answer to prompts – and the reason they are trained on so much data is to learn virtually any pattern (of fact or fiction) that has ever occurred. By contrast, humans somehow develop from a much smaller dataset a ‘world model’ in our minds which allows us, based on experience, to apply commonsense to solving problems we have not yet experienced.

AI developers claim they have created AI capable of thinking, using a chain of reasoning (for example OpenAI’s o1/o3 and Anthropic’s Claude 3.7 Sonnet Thinking). While this is disputed, including by Apple, integrating “thinking” AI with robots could substantially improve their adaptability.

A recent study by Google’s DeepMind (Zhao, Wahid et al) shows some modest evidence of generalisation by AI-powered robots. The Google researchers noted the challenges robots have faced to date in simple tasks:

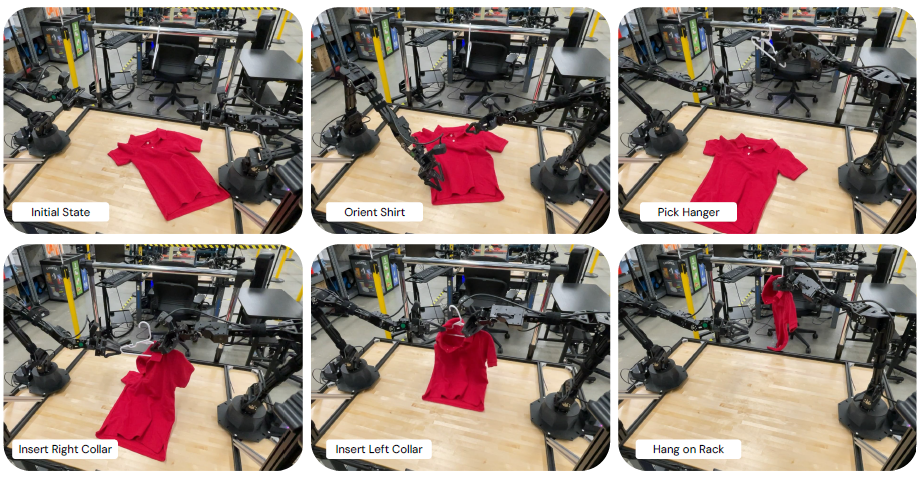

Dexterous manipulation tasks such as tying shoelaces or hanging T-shirts on a coat hanger have traditionally been seen as very difficult to achieve with robots. From a modeling perspective, these tasks are challenging since they involve (deformable) objects with complex contact dynamics, require many manipulation steps to solve the task and involve the coordination of high-dimensional robotic manipulators, especially in bimanual setups and generally often demand high precision.

The Google researchers trained AI on several tasks including hanging a T-shirt. The detailed steps include flattening the shirt, picking a hanger off a rack, performing a handover, picking up the shirt, precisely inserting both sides of the hanger into the shirt collar, then hanging the shirt back on the rack.

The visual training data was gathered through the ALOHA platform which allows bimanual teleoperation via a puppeteering interface, which involves a human teleoperator to drive two smaller leader arms, whose joints are synchronised with two larger follower arms: that is, rather than seeing human arms undertaking the task (which the AI would not be able to associate with its own required actions), the AI sees familiar robot arms (puppeteered by humans) doing the task.

Trained on this data, the AI-powered robot was able to successfully complete hanging a T-shirt 75% of the time.

While the AI-powered robots were being asked to perform the exact task on which they had been trained, the Google researchers said they saw some promising signs of generalisation:

In several cases, the T-shirt fell off the hanger and the robot would pick it up and rehang it.

While T-shirts seen in the training set were only kid’s sizes with short sleeves and red, white, blue, navy and baby blue colours, the robot could handle hanging a grey adult-sized T-shirt.

If the T-shirt was plus or minus 60 degrees tilted and wrinkled compared to the training data, the robot could learn good behaviours for flattening and centring the shirt and then going on to hang it

However, the Google researchers also observed that there were limits to the adaptability: the AI-powered robot was confused if the T-shirt was upside down on the table “since there are no instances of this in the training set”. The researchers also note that “the policy replans every one second, which may not be fast enough for very reactive tasks”: that is, it’s a relatively slow thinker!

Conclusion

Meta’s Chief AI scientist, Yann LeCunn argues that LLMs are a dead end – that they will never get past pattern matching. He argues that the next leap forward must be the development of AI with their own ‘world model’ and that this is likely to happen in the world of robots over the next three to five years:

We don’t have robots that can do what a cat can do – understanding the physical world of a cat is way superior to everything we can do with AI. Maybe the coming decade will be the decade of robotics, maybe we’ll have AI systems that are sufficiently smart to understand how the real world works.

Peter Waters

Consultant