Last month Meta released its V-JEPA 2 AI model which can power robots that can understand what’s happening around them in a changing physical world, predict future states and use those predictions to plan the best course of action. V-JEPA 2 can ‘think’ in this way because it has been taught, like humans, a model of the real world.

What is a world model?

Humans hold a mental model of the world, allowing them to adapt to new tasks and navigate unfamiliar situations that we’re confronted with daily. From an early age, we ‘get the hang’ of how the real world works.

As it’s not possible to remember the vast amount of information that bombards us every day, the human brain learns an abstract representation of spatial and temporal aspects of this information. Humans use this world model in processing new inputs gathered by our senses. Some might call this commonsense, experience or intuition.

Two leading experts on world models, Ha and Schmidhuber, give the following example of a baseball player swinging at a ball:

The batter has milliseconds to decide how they should swing the bat – shorter than the time it takes for visual signals to reach our brain. The reason we are able to hit a 100 mph fastball is due to our ability to instinctively predict when and where the ball will go. For professional players, this all happens subconsciously. Their muscles reflexively swing the bat at the right time and location in line with their internal models’ predictions. They can quickly act on their predictions of the future without the need to consciously roll out possible future scenarios to form a plan.

By contrast, current large language models (LLMs) are ‘prisoners’ of the finite world they know. Trained on vast datasets scraped from the internet, they absorb patterns across recorded human knowledge. These patterns are encoded in the model’s hundreds of billions of parameters. LLMs draw on this information to make the predictions needed to respond to prompts from users, but they are not very good at dealing with unknown variables or different possible actions not represented within their training data.

The difference between how humans and current LLMs ‘think’ gives rise to the Moravec paradox: tasks that are difficult for humans, such as solving maths problems, are simple for AI to handle because they can be described and modelled easily. In contrast, tasks that are simple for a one year old human, are difficult or impossible for AI because they require common sense applied to spatial awareness.

This limitation of current LLM architecture is even more evident with AI powered robots. The real world is so complex, changing and unpredictable that it cannot be captured in any training dataset, no matter how massive. This makes it essential for robots to develop an AI version of a world model.

How does Meta’s model work?

Meta’s JEPA (Joint Embedding Predictive Architecture) model is not the first AI with an inbuilt world model. Nvidia released its Cosmos model in early 2025, describing it as “the ChatGPT moment for robotics”. Meta’s model appears to be the next, bigger step forward in world models.

To be able to successfully tackle a new scenario, a world model AI requires the following:

Understanding the motions involved: V-JEPA 2 was trained on over 1 million hours of video to encode fine grained types of motion or actions. V-JEPA 2 was trained using self-supervised learning, which allows training on video without requiring additional human labelling. The video length was extended from the 16 frames typically used in earlier multi-modal models to 64 frames (16 seconds) to provide richer training data on each motion. The number of training iterations also was extended from 92K to 252K. This training gives the AI a model of how people interact with objects, how objects move in the physical world and how objects interact with other objects. Yet the model which is relatively economical to run: V-JEPA 2 has 1.2 billion parameters compared to the hundreds of billions of parameters of LLMs like Clause Sonnet 3.5. This was made possible by focusing the training on the motion itself and not the background detail. For example, learning the trajectory of an object in motion and not the precise location of each blade of grass over which the object is flying.

Understanding video question-answering: V-JEPA 2 will receive instructions in text or spoken language from the user and that needs to be ‘translated’ into a video-based output to execute the action.

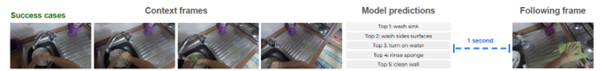

Prediction: to predict the unfamiliar action requested by the user, the V-JEPA 2 robot needs to predict, step-by-step, what the motion would look like, drawing on the world model of different types of actions on which it has been trained. The AI model was taught to predict the next step in a motion by removing ‘masking’ frames in the training videos. It then compared the model’s prediction of the masked frames with the unmasked actual footage, improving through repeated training runs. In the example below, V-JEPA 2 correctly predicts that the most likely frame in one second will show “washing the sink”, but the other predictions are also credible given the presence of a tap and the wall.

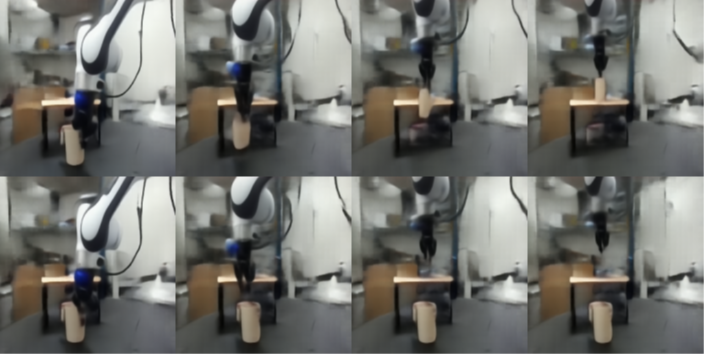

Planning: After pre-training, the V-JEPA 2 model predicts the motion required to perform a task, but these predictions need to match the actions the robot’s mechanical limbs can actually execute. V-JEPA 2 was trained on 64 hours of video of a table-top Franka Panda robot arm equipped with a two-finger gripper. The predictor compares the motion predictions with the robot’s capabilities in a shared data space, then selects the option that best fulfills the user’s request. The following images show how this process works, in both examples the model is asked to move a cup. In the first sequence, it is instructed to use a closed gripper, allowing it to pick up the cup and move it to the table. In the second sequence, it is instructed to use an open gripper, in which case the cup remains on the floor because the robot cannot pick it up.

How does V-JEPA 2 compare to other AI powered robots?

The Meta researchers compare the performance of a V-JEPA 2 powered robot with other AI powered robots, including Nvidia’s Cosmos, on pick-and-place tasks: move a cup and a small box from A to B and the individual tasks of grasping and picking up each and reaching with each ‘in claw’.

All the tested models scored 100% on the reaching function, but the Octo robot successfully grasped a cup and completed the whole pick and place routine only 15% of the time compared to V-JEPA 2’s success rate of 65%. Cosmos had 0% success on grasping a cup although scored a little higher on grasping a box at 20% but scored 0% on the whole pick and place movement for both a cup and a box. Cosmos also took four minutes to think through how to grasp a cup while V-JEPA 2 took 16 seconds.

Where to next?

Humans understand the laws of gravity and physics as part of their world model. Meta has released a new benchmark, IntPhys 2, designed to measure the ability of models to distinguish between physically plausible and implausible scenarios. A game engine generates pairs of videos, where the two videos are identical up to a certain point and then a physics-breaking event occurs in one of the two videos. The AI model must then identify which video has the physics-breaking event.

While humans achieve near-perfect accuracy on this task across a range of scenarios and conditions, current video models are at or near chance – that is, no better than guessing.

V-JEPA 2 is also limited to simple, single dimension tasks like moving a cup from A to B. However, many human tasks, such as baking a cake, involves multiple sub-tasks that humans arrange in an order, conducting some in a necessary sequence and others in parallel, to reach the final goal of a baked cake. Meta’s future focus will be on training hierarchical JEPA models that are capable of learning, reasoning and planning across multiple temporal and spatial scales.

Meta also wants to train JEPA robots that can make predictions using a variety of senses, including vision, audio and touch.

Peter Waters

Consultant